
Preventing and detecting insufficient effort survey responding

ZHONG Xiaoyu, LI Mingyao, LI Lingyan

Collaboration Innovation Center of Assessment toward Basic Education Quality, Beijing Normal University, Beijing 100875, China

Received: 2020-04-27 | Publication date: 2021-02-15 | Online release: 2020-12-29

Corresponding author: LI Lingyan, E-mail: lilingyan@bnu.edu.cn

Funding: General Program of the National Social Science Fund of China, "Research on the Modernization of School Governance Based on Big-Data Evidence" (20BGL234)

Abstract

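Questionnaire surveys are one of the most common data-collection methods in psychology and education, but insufficient effort responding by participants can distort questionnaire data. A review of existing research shows that (a) insufficient effort responding can be defined in two ways, by its observable response patterns or by its underlying causes; (b) common preventive (a priori) control methods fall into two broad categories: reducing task difficulty and increasing respondents' motivation to respond carefully; and (c) post hoc detection methods fall into three main categories: embedded detection scales, response-pattern detection, and response-time detection. Future research should refine existing control methods and develop new ones on the basis of research into response mechanisms, test whether detection methods remain valid across contexts and develop new detection methods, and examine more closely how partial insufficient effort responding can be detected and handled.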
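As an illustration of the response-pattern detection methods listed in (c), the sketch below computes two indices used in the literature cited here: the longstring index, the length of the longest run of identical consecutive answers (e.g., Meade & Craig, 2012), and intra-individual response variability (IRV), the standard deviation of one respondent's answers across items (Dunn et al., 2018). It is a minimal sketch, assuming Likert-type answers stored as one list of integers per respondent; the function names and data are hypothetical, IRV is computed here as the sample standard deviation, and no flagging cut-off is implied.

```python
from statistics import stdev


def longstring(responses: list[int]) -> int:
    """Length of the longest run of identical consecutive answers."""
    if not responses:
        return 0
    longest = current = 1
    for prev, cur in zip(responses, responses[1:]):
        # Extend the run when the answer repeats; otherwise start a new run.
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


def irv(responses: list[int]) -> float:
    """Intra-individual response variability: sample SD of one person's answers."""
    if len(responses) < 2:
        raise ValueError("IRV needs at least two responses")
    return stdev(responses)


# Hypothetical 5-point Likert answers of three respondents to 10 items.
data = {
    "r1": [4, 2, 5, 3, 4, 1, 2, 4, 3, 5],  # varied answers
    "r2": [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],  # straight-lining
    "r3": [1, 2, 3, 4, 5, 1, 2, 3, 4, 5],  # diagonal (patterned) answering
}

for rid, answers in data.items():
    print(rid, longstring(answers), round(irv(answers), 2))
```

In this toy data the straight-lining respondent r2 stands out on both indices (longstring 10, IRV 0), whereas the diagonal pattern of r3 looks unremarkable on both, which is one reason detection studies recommend combining several indicators (e.g., Curran, 2016).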


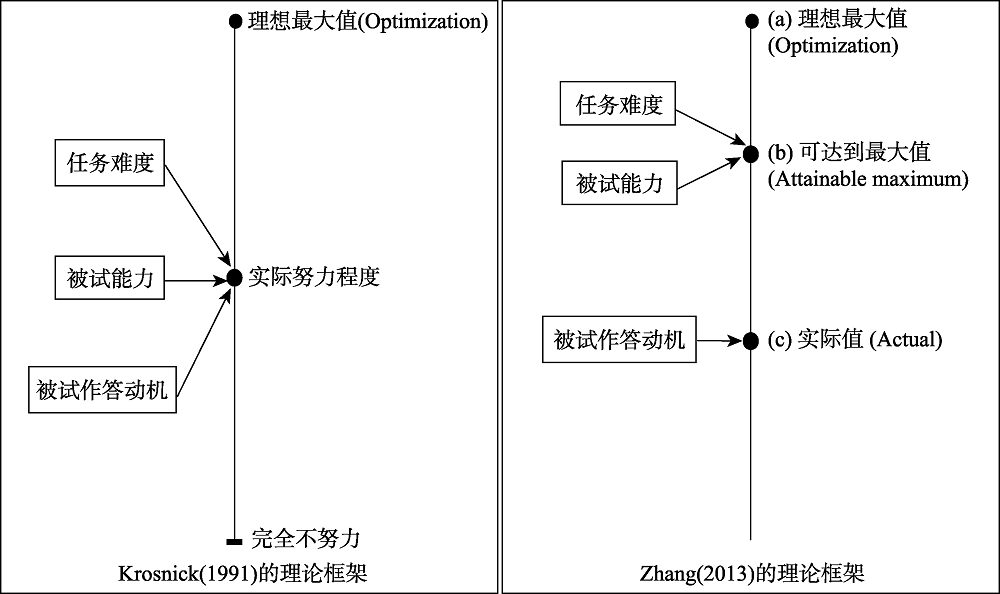

Figure 1. Examples of different response patterns


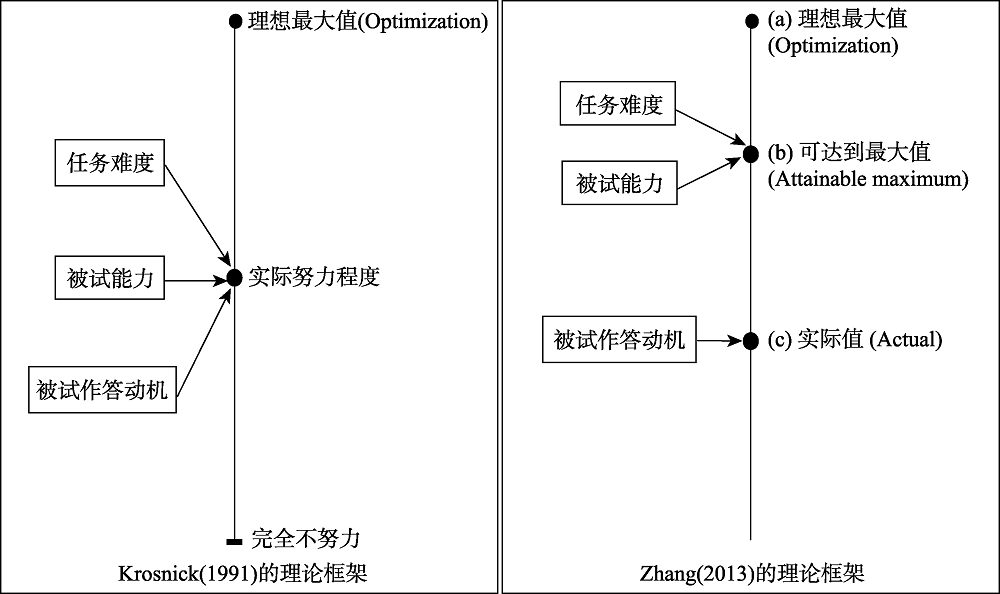

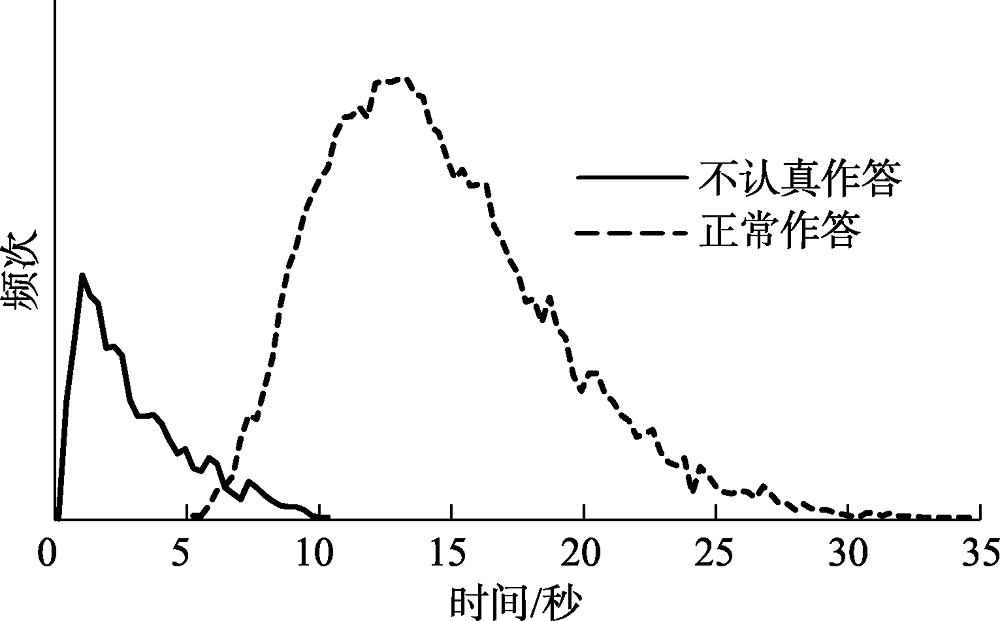

Figure 2. Theoretical frameworks of Krosnick (1991) and Zhang (2013). Source: Zhang (2013)


Table 1. Lower and upper thresholds for outlier response times (Höhne & Schlosser, 2018)

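| Source | Lower threshold | Upper threshold |
|---|---|---|
| | Mean - (2 × SD) | Mean + (2 × SD) |
| | Q.50 - (1.5 × IQR) | Q.50 + (1.5 × IQR) |
| | Q.50 - (1.5 × (Q.50 - Q.25)) | Q.50 + (1.5 × (Q.75 - Q.50)) |
| | Q.50 - (3 × (Q.50 - Q.25)) | Q.50 + (3 × (Q.75 - Q.50)) |
| | Q.01 | Q.99 |

Note: SD = standard deviation; Q.p = the p-quantile of the response-time distribution (Q.50 = median, Q.25 and Q.75 = first and third quartiles, Q.01 and Q.99 = 1st and 99th percentiles); IQR = Q.75 - Q.25.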
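The five threshold rules in Table 1 can be computed directly from a sample of response times. The sketch below is a minimal illustration, assuming response times in seconds for a single item or page; it uses Python's `statistics` module (sample SD, and `statistics.quantiles` with the linear-interpolation `"inclusive"` method, an assumption that can shift the quantile-based cut-offs slightly), and applies each lower/upper pair exactly as written in the table. The rule labels, function names, and data are hypothetical.

```python
from statistics import mean, quantiles, stdev


def outlier_thresholds(rts: list[float]) -> dict[str, tuple[float, float]]:
    """Lower/upper response-time cut-offs for the five rules in Table 1."""
    m, sd = mean(rts), stdev(rts)  # sample mean and sample SD
    # 99 cut points with linear interpolation ("inclusive" method):
    # q[k - 1] is the k-th percentile, so q[49] is the median.
    q = quantiles(rts, n=100, method="inclusive")
    q01, q25, q50, q75, q99 = q[0], q[24], q[49], q[74], q[98]
    iqr = q75 - q25
    return {
        "mean_2sd": (m - 2 * sd, m + 2 * sd),
        "median_1.5iqr": (q50 - 1.5 * iqr, q50 + 1.5 * iqr),
        "median_1.5half": (q50 - 1.5 * (q50 - q25), q50 + 1.5 * (q75 - q50)),
        "median_3half": (q50 - 3 * (q50 - q25), q50 + 3 * (q75 - q50)),
        "q01_q99": (q01, q99),
    }


def flag_outliers(rts: list[float], lower: float, upper: float) -> list[int]:
    """Indices of response times falling outside [lower, upper]."""
    return [i for i, t in enumerate(rts) if t < lower or t > upper]


# Hypothetical response times (seconds) of 12 respondents on one survey page.
rts = [1.2, 8.5, 9.1, 10.4, 11.0, 11.8, 12.3, 13.5, 14.2, 15.0, 16.7, 95.0]

for rule, (low, high) in outlier_thresholds(rts).items():
    print(f"{rule:15s} [{low:7.2f}, {high:7.2f}] -> {flag_outliers(rts, low, high)}")
```

In this hypothetical sample the very fast 1.2 s response is missed by the mean ± 2 SD rule, because the single 95 s response inflates the SD, while the median-based rules flag both extremes; the tighter 1.5 × half-IQR rule additionally flags 8.5 s and 16.7 s. How well such definitions work on real survey paradata is the question Höhne and Schlosser (2018) investigated.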


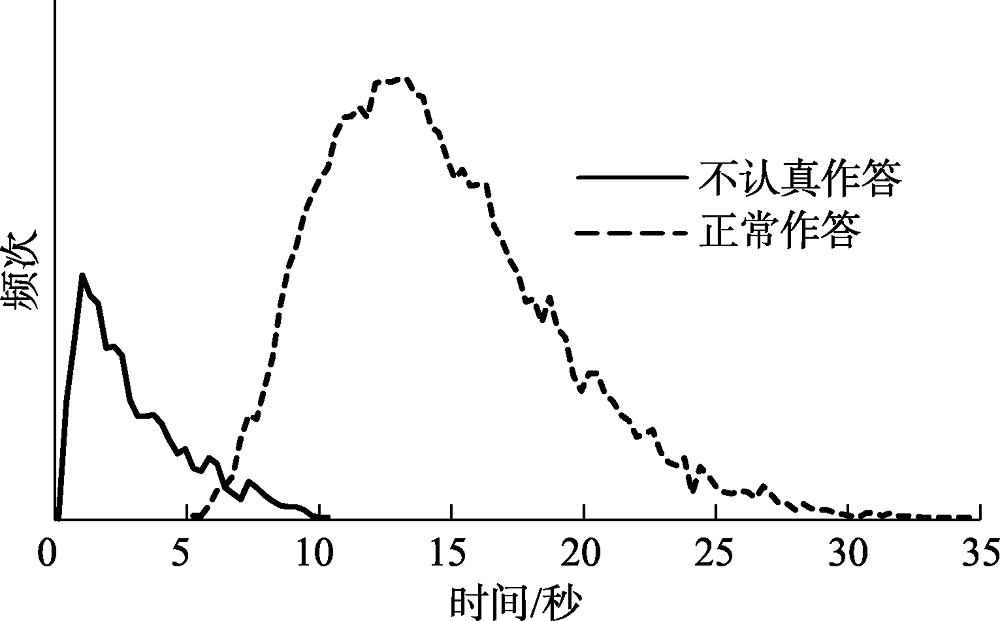

Figure 3. Theoretical response-time distributions of rapid-guessing (insufficient effort) responses and normal responses
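Distributions like those in Figure 3 motivate threshold-based detection: if rapid (insufficient effort) responses form a fast mode separated from the slower distribution of normal responses, a per-item time threshold can classify each response. One index built on this idea is response time effort (RTE; Wise & Kong, 2005), the proportion of a respondent's items answered at or above the item's threshold (solution behavior). The sketch below assumes the thresholds are already given; the threshold values, the 0.90 flagging cut-off, the function names, and the data are all hypothetical choices for illustration.

```python
def solution_behavior(rts: list[float], thresholds: list[float]) -> list[int]:
    """1 if a response time reaches the item's threshold (solution behavior), else 0."""
    if len(rts) != len(thresholds):
        raise ValueError("one threshold per item is required")
    return [1 if t >= th else 0 for t, th in zip(rts, thresholds)]


def response_time_effort(rts: list[float], thresholds: list[float]) -> float:
    """RTE: proportion of items that show solution behavior."""
    sb = solution_behavior(rts, thresholds)
    return sum(sb) / len(sb)


# Hypothetical per-item thresholds in seconds, e.g. placed at the valley
# between the fast and slow modes of each item's response-time distribution.
thresholds = [3.0, 3.0, 4.0, 3.0, 5.0]

respondents = {
    "engaged": [6.2, 4.8, 9.5, 7.1, 12.0],
    "partly_rapid": [6.0, 1.1, 0.9, 7.5, 1.3],
    "rapid": [0.8, 0.7, 1.0, 0.6, 0.9],
}

FLAG_BELOW = 0.90  # hypothetical cut-off for flagging low effort

for rid, times in respondents.items():
    rte = response_time_effort(times, thresholds)
    print(rid, rte, "flagged" if rte < FLAG_BELOW else "ok")
```

Because the classification is made item by item, the same output also shows where in the questionnaire rapid responses occur (items 2, 3 and 5 for `partly_rapid`), not only whether a respondent is flagged overall.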


References

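| [1] | Che, W. B. (2001). 心理咨询大百科全书 [Encyclopedia of psychological counseling]. Hangzhou: Zhejiang Science and Technology Press. |

| [2] | Liu, W. H., & Chen, Y. (1991). 方法大辞典 [Dictionary of methods]. Jinan: Shandong People's Publishing House. |

| [3] | Mou, Z. J. (2017). MOOCs学习参与度影响因素的结构关系与效应研究——自我决定理论的视角 [The structural relationships and effects of factors influencing learning engagement in MOOCs: A self-determination theory perspective]. e-Education Research, 38(10), 37-43. |

| [4] | Wang, L. J., & Zhu, D. Q. (2009). 中小学教师对待公开课态度的调查研究 [A survey of primary and secondary school teachers' attitudes toward open lessons]. Shanghai Research on Education, (8), 28-31. |

| [5] | Wei, X. H., & Zhang, L. H. (2019). 单题项测量: 质疑、回应及建议 [Single-item measurement: Doubts, responses and suggestions]. Advances in Psychological Science, 27(7), 1194-1204. |

| [6] | Yao, C., Gong, Y., Pu, G. N., & Ge, W. L. (2012). 学生评教异常数据的筛选与处理 [Screening and handling of abnormal data in student evaluations of teaching]. Journal of Mudanjiang Normal University (Natural Sciences Edition), (3), 7-8. |

| [7] | Zheng, Y. X., Yang, H., & Feng, S. X. (2018). 高校教师信息化教学适应性绩效评价研究 [Evaluating the adaptive performance of university teachers in ICT-based teaching]. China Educational Technology, (2), 21-28. |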

| [8] | Anduiza, E., & Galais, C. (2017). Answering without reading: IMCs and strong satisficing in online surveys. International Journal of Public Opinion Research, 29(3), 497-519. |

| [9] | Baer, R. A., Ballenger, J., Berry, D. T., & Wetter, M. W. (1997). Detection of random responding on the MMPI-A. Journal of Personality Assessment, 68(1), 139-151. PMID: 16370774 |

| [10] | Barge, S., & Gehlbach, H. (2012). Using the theory of satisficing to evaluate the quality of survey data. Research in Higher Education, 53(2), 182-200. doi: 10.1007/s11162-011-9251-2 |

| [11] | Beach, D. A. (1989). Identifying the random responder. The Journal of Psychology, 123(1), 101-103. |

| [12] | Berry, D. T., Wetter, M. W., Baer, R. A., Larsen, L., Clark, C., & Monroe, K. (1992). MMPI-2 random responding indices: Validation using a self-report methodology. Psychological Assessment, 4(3), 340. |

| [13] | Börger, T. (2016). Are fast responses more random? Testing the effect of response time on scale in an online choice experiment. Environmental and Resource Economics, 65(2), 389-413. |

| [14] | Bowling, N. A., Huang, J. L., Bragg, C. B., Khazon, S., Liu, M., & Blackmore, C. E. (2016). Who cares and who is careless? Insufficient effort responding as a reflection of respondent personality. Journal of Personality and Social Psychology, 111(2), 218. PMID: 26927958 |

| [15] | Burns, G. N., Christiansen, N. D., Morris, M. B., Periard, D. A., & Coaster, J. A. (2014). Effects of applicant personality on resume evaluations. Journal of Business and Psychology, 29(4), 573-591. |

| [16] | Carrier, L. M., Cheever, N. A., Rosen, L. D., Benitez, S., & Chang, J. (2009). Multitasking across generations: Multitasking choices and difficulty ratings in three generations of Americans. Computers in Human Behavior, 25(2), 483-489. |

| [17] | Cialdini, R. B. (2001). Harnessing the science of persuasion. Harvard Business Review, 79(9), 72-81. |

| [18] | Cibelli, K. L. (2017). The effects of respondent commitment and feedback on response quality in online surveys. (Unpublished doctoral dissertation), University of Michigan, Ann Arbor. |

| [19] | Costa Jr, P. T., & McCrae, R. R. (2008). The Revised NEO Personality Inventory (NEO-PI-R). In G. J. Boyle, G. Matthews, & D. H. Saklofske (Eds.), The SAGE Handbook of Personality Theory and Assessment: Personality Measurement and Testing (pp. 179-198). London: SAGE Publications Ltd. |

| [20] | Credé, M. (2010). Random responding as a threat to the validity of effect size estimates in correlational research. Educational and Psychological Measurement, 70(4), 596-612. |

| [21] | Curran, P. G. (2016). Methods for the detection of carelessly invalid responses in survey data. Journal of Experimental Social Psychology, 66, 4-19. |

| [22] | DeSimone, J. A., DeSimone, A. J., Harms, P. D., & Wood, D. (2018). The differential impacts of two forms of insufficient effort responding. Applied Psychology, 67(2), 309-338. |

| [23] | DeSimone, J. A., Harms, P. D., & DeSimone, A. J. (2015). Best practice recommendations for data screening. Journal of Organizational Behavior, 36(2), 171-181. |

| [24] | Drasgow, F., Levine, M. V., & Williams, E. A. (1985). Appropriateness measurement with polychotomous item response models and standardized indices. British Journal of Mathematical and Statistical Psychology, 38, 67-86. |

| [25] | Dunn, A. M., Heggestad, E. D., Shanock, L. R., & Theilgard, N. (2018). Intra-individual response variability as an indicator of insufficient effort responding: Comparison to other indicators and relationships with individual differences. Journal of Business and Psychology, 33(1), 105-121. |

| [26] | Emons, W. H. M. (2008). Nonparametric person-fit analysis of polytomous item scores. Applied Psychological Measurement, 32(3), 224-247. |

| [27] | Evans, J. R., & Mathur, A. (2005). The value of online surveys. Internet Research, 15(2), 195-219. |

| [28] | Fang, J. M., Prybutok, V., & Wen, C. (2016). Shirking behavior and socially desirable responding in online surveys: A cross-cultural study comparing Chinese and American samples. Computers in Human Behavior, 54, 310-317. doi: 10.1016/j.chb.2015.08.019 |

| [29] | Fang, J. M., Wen, C., & Prybutok, V. (2014). An assessment of equivalence between paper and social media surveys: The role of social desirability and satisficing. Computers in Human Behavior, 30, 335-343. doi: 10.1016/j.chb.2013.09.019 |

| [30] | Francavilla, N. M., Meade, A. W., & Young, A. L. (2019). Social interaction and internet-based surveys: Examining the effects of virtual and in-person proctors on careless response. Applied Psychology, 68(2), 223-249. doi: 10.1111/apps.v68.2 |

| [31] | García, A. A. (2011). Cognitive interviews to test and refine questionnaires. Public Health Nursing, 28(5), 444-450. doi: 10.1111/j.1525-1446.2010.00938.x |

| [32] | Gough, H. G., & Bradley, P. (1996). The California Psychological Inventory manual: Third edition. Palo Alto, CA: Consulting Psychologists Press. |

| [33] | Grau, I., Ebbeler, C., & Banse, R. (2019). Cultural differences in careless responding. Journal of Cross-Cultural Psychology, 50(3), 336-357. |

| [34] | Guttman, L. (1944). A basis for scaling qualitative data. American Sociological Review, 9(2), 139-150. |

| [35] | Guttman, L. (1950). The basis for scalogram analysis. In S. A. Stouffer, L. Guttman, E. A. Suchman, P. F. Lazarsfeld, S. A. Star, & J. A. Clausen (Eds.), Measurement and prediction (pp. 60-90). Princeton, NJ: Princeton University Press. |

| [36] | He, J., & van de Vijver, F. J. R. (2013). A general response style factor: Evidence from a multi-ethnic study in the Netherlands. Personality and Individual Differences, 55(7), 794-800. |

| [37] | He, J., & van de Vijver, F. J. R. (2015a). Effects of a general response style on cross-cultural comparisons: Evidence from the Teaching and Learning International Survey. Public Opinion Quarterly, 79(S1), 267-290. |

| [38] | He, J., & van de Vijver, F. J. R. (2015b). Self-presentation styles in self-reports: Linking the general factors of response styles, personality traits, and values in a longitudinal study. Personality and Individual Differences, 81, 129-134. |

| [39] | He, J., & van de Vijver, F. J. R. (2016). Response styles in factual items: Personal, contextual and cultural correlates. International Journal of Psychology, 51(6), 445-452. PMID: 26899311 |

| [40] | Hoaglin, D. C., Mosteller, F., & Tukey, J. W. (2000). Understanding robust and exploratory data analysis. New York, NY: John Wiley. |

| [41] | Höhne, J. K., & Schlosser, S. (2018). Investigating the adequacy of response time outlier definitions in computer-based web surveys using paradata SurveyFocus. Social Science Computer Review, 36(3), 369-378. |

| [42] | Holtzman, N. S., & Donnellan, M. B. (2017). A simulator of the degree to which random responding leads to biases in the correlations between two individual differences. Personality and Individual Differences, 114, 187-192. |

| [43] | Huang, J. L., Curran, P. G., Keeney, J., Poposki, E. M., & DeShon, R. P. (2012). Detecting and deterring insufficient effort responding to surveys. Journal of Business and Psychology, 27(1), 99-114. |

| [44] | Huang, J. L., Liu, M. Q., & Bowling, N. A. (2015). Insufficient effort responding: Examining an insidious confound in survey data. Journal of Applied Psychology, 100(3), 828-845. |

| [45] | Jackson, D. N. (1976). The appraisal of personal reliability. Paper presented at the meetings of the Society of Multivariate Experimental Psychology, University Park, PA. |

| [46] | Jackson, D. N. (1977). Jackson vocational interest survey: manual. Port Huron, MI: Research Psychologists Press. |

| [47] | Johnson, J. A. (2005). Ascertaining the validity of individual protocols from web-based personality inventories. Journal of Research in Personality, 39(1), 103-129. |

| [48] | Kam, C. C. S., & Meyer, J. P. (2015). How careless responding and acquiescence response bias can influence construct dimensionality. Organizational Research Methods, 18(3), 512-541. |

| [49] | Karabatsos, G. (2003). Comparing the aberrant response detection performance of thirty-six person-fit statistics. Applied Measurement in Education, 16(4), 277-298. |

| [50] | Kountur, R. (2016). Detecting careless responses to self-reported questionnaires. Eurasian Journal of Educational Research, (64), 307-318. |

| [51] | Krosnick, J. A. (1991). Response strategies for coping with the cognitive demands of attitude measures in surveys. Applied Cognitive Psychology, 5(3), 213-236. |

| [52] | Lenzner, T., Kaczmirek, L., & Lenzner, A. (2010). Cognitive burden of survey questions and response times: A psycholinguistic experiment. Applied Cognitive Psychology, 24(7), 1003-1020. |

| [53] | Levine, M. V., & Rubin, D. B. (1979). Measuring the appropriateness of multiple-choice test scores. Journal of Educational Statistics, 4, 269-290. |

| [54] | Lloyd, K., & Devine, P. (2010). Using the internet to give children a voice: An online survey of 10- and 11-year-old children in Northern Ireland. Field Methods, 22(3), 270-289. |

| [55] | Mahalanobis, P. C. (1936). On the generalized distance in statistics. Proceedings of the National Institute of Sciences of India, 2, 49-55. |

| [56] | Maniaci, M. R., & Rogge, R. D. (2014). Caring about carelessness: Participant inattention and its effects on research. Journal of Research in Personality, 48, 61-83. |

| [57] | Marjanovic, Z., Holden, R., Struthers, W., Cribbie, R., & Greenglass, E. (2015). The inter-item standard deviation (ISD): An index that discriminates between conscientious and random responders. Personality and Individual Differences, 84, 79-83. |

| [58] | Mayerl, J. (2013). Response latency measurement in surveys: Detecting strong attitudes and response effects. Survey Methods: Insights from the Field. Retrieved from https://surveyinsights.org/?p=1063 |

| [59] | McGrath, R. E., Mitchell, M., Kim, B. H., & Hough, L. (2010). Evidence for response bias as a source of error variance in applied assessment. Psychological Bulletin, 136(3), 450-470. doi: 10.1037/a0019216. PMID: 20438146 |

| [60] | Meade, A. W., & Craig, S. B. (2012). Identifying careless responses in survey data. Psychological Methods, 17(3), 437. PMID: 22506584 |

| [61] | Meijer, R. R., & Sijtsma, K. (2001). Methodology review: Evaluating person fit. Applied Psychological Measurement, 25(2), 107-135. |

| [62] | Melipillán, E. R. (2019). Careless survey respondents: Approaches to identify and reduce their negative impact on survey estimates. (Unpublished doctoral dissertation), University of Michigan, Ann Arbor. |

| [63] | Nguyen, H. L. T. (2017). Tired of survey fatigue? Insufficient effort responding due to survey fatigue (Unpublished master’s thesis), Middle Tennessee State University, Murfreesboro. |

| [64] | Niessen, A. S. M., Meijer, R. R., & Tendeiro, J. N. (2016). Detecting careless respondents in web-based questionnaires: Which method to use? Journal of Research in Personality, 63, 1-11. |

| [65] | Oppenheimer, D. M., Meyvis, T., & Davidenko, N. (2009). Instructional manipulation checks: Detecting satisficing to increase statistical power. Journal of Experimental Social Psychology, 45(4), 867-872. |

| [66] | Revilla, M., & Ochoa, C. (2015). What are the links in a web survey among response time, quality, and auto-evaluation of the efforts done? Social Science Computer Review, 33(1), 97-114. |

| [67] | Rousseau, B., & Ennis, J. M. (2013). Importance of correct instructions in the tetrad test. Journal of Sensory Studies, 28(4), 264-269. |

| [68] | Schneider, S., May, M., & Stone, A. A. (2018). Careless responding in internet-based quality of life assessments. Quality of Life Research, 27(4), 1077-1088. PMID: 29248996 |

| [69] | Schnell, R. (1994). Graphisch gestützte Datenanalyse [Graphically supported data analysis]. München, Germany: Oldenbourg. |

| [70] | Soland, J., Wise, S. L., & Gao, L. Y. (2019). Identifying disengaged survey responses: New evidence using response time metadata. Applied Measurement in Education, 32(2), 151-165. |

| [71] | van der Flier, H. (1980). Vergelijkbaarheid van individuele testprestaties [Comparability of individual test performance]. Lisse, Netherlands: Swets & Zeitlinger. |

| [72] | Velleman, P. F., & Welsch, R. E. (1981). Efficient computing of regression diagnostics. The American Statistician, 35(4), 234-242. |

| [73] | Wang, C., & Xu, G. J. (2015). A mixture hierarchical model for response times and response accuracy. British Journal of Mathematical and Statistical Psychology, 68(3), 456-477. |

| [74] | Ward, M. K., & Meade, A. W. (2018). Applying social psychology to prevent careless responding during online surveys. Applied Psychology, 67(2), 231-263. |

| [75] | Ward, M. K., & Pond, S. B. (2015). Using virtual presence and survey instructions to minimize careless responding on Internet-based surveys. Computers in Human Behavior, 48, 554-568. |

| [76] | Wise, S. L. (2017). Rapid-guessing behavior: Its identification, interpretation, and implications. Educational Measurement: Issues and Practice, 36(4), 52-61. |

| [77] | Wise, S. L., & DeMars, C. E. (2006). An application of item response time: The effort-moderated IRT model. Journal of Educational Measurement, 43(1), 19-38. |

| [78] | Wise, S. L., & Kong, X. J. (2005). Response time effort: A new measure of examinee motivation in computer-based tests. Applied Measurement in Education, 18(2), 163-183. |

| [79] | Woods, C. M. (2006). Careless responding to reverse-worded items: Implications for confirmatory factor analysis. Journal of Psychopathology and Behavioral Assessment, 28(3), 186-191. |

| [80] | Yan, T., & Tourangeau, R. (2008). Fast times and easy questions: The effects of age, experience and question complexity on web survey response times. Applied Cognitive Psychology, 22(1), 51-68. |

| [81] | Zhang, C. (2013). Satisficing in web surveys: Implications for data quality and strategies for reduction. (Unpublished doctoral dissertation). University of Michigan, Ann Arbor. |

| [82] | Zhang, C., & Conrad, F. G. (2018). Intervening to reduce satisficing behaviors in web surveys. Social Science Computer Review, 36(1), 57-81. |

| [83] | Zijlstra, W. P., van der Ark, L. A., & Sijtsma, K. (2011). Outliers in questionnaire data: Can they be detected and should they be removed? Journal of Educational and Behavioral Statistics, 36(2), 186-212. |
