Short Bio

- Han-Jia Ye is an Assistant Researcher in the School of Artificial Intelligence at Nanjing University (NJU). His research focuses on machine learning and its applications to data mining and computer vision.

- Han-Jia received his B.Sc. degree from Nanjing University of Posts and Telecommunications, China, in June 2013. After that, he became an M.Sc. student in the LAMDA Group led by Prof. Zhi-Hua Zhou at Nanjing University. In Sept. 2015, Han-Jia began his Ph.D. studies in machine learning under the supervision of Prof. Yuan Jiang and Prof. De-Chuan Zhan. He received his Ph.D. degree in May 2019.

Latest News

- 09/2020: 1 paper accepted by IJCV on generalized few-shot learning.

- 04/2020: 1 paper accepted by TPAMI on heterogeneous few-shot model reuse.

- 03/2020: 2 papers (1 oral and 1 poster) accepted by CVPR 2020.

- 02/2020: 1 arXiv paper on meta-learning.

- 01/2020: 1 arXiv paper on imbalanced deep learning.

- 11/2019: Attended ACML 2019 in Nagoya, Japan.

- 10/2019: 1 paper accepted by TKDE on multiple instance learning with novel classes.

- 09/2019: Invited talk at a CCF-Big Data workshop (Wuhan, China) on "Multi-Metric Learning for Heterogeneous Data".

- 09/2019: 1 paper with Xiang-Rong Sheng and De-Chuan Zhan accepted by Machine Learning.

- 07/2019: Joined Nanjing University (School of Artificial Intelligence) as an Assistant Researcher.

- 05/2019: Successfully defended thesis on "Metric Learning for Open Environment".

- 10/2018: Finished a visit to Prof. Fei Sha's group at the University of Southern California, Los Angeles.

Main Research Interests

Learning with Similarity and Distance

- Han-Jia focuses on finding an adaptive similarity/distance measure between objects that reflects their relationships, i.e., comparing examples in a better way.

Similarity and distance measures form the basis of many learning methods and facilitate real applications as well. Han-Jia analyzes the theoretical foundations of learning a distance measure and explores a unified view to explain complex linkages between objects.

Learning with Limited Data

- The ability of a model to learn from limited data is essential because collecting instances and labels is costly. The key is how to extract and utilize knowledge from related tasks and domains. Specifically, Han-Jia mainly works in two directions: how to reuse models effectively across heterogeneous tasks, and how to learn meta-knowledge for few-shot learning.

Learning with Rich Semantics

- Real-world complex environments usually involve complex semantics. Han-Jia tries to discover semantic information from data following a three-step strategy, i.e., combining multiple data sources, exploring/decomposing types of relationships between objects, and automatically selecting suitable semantic components.

Publications (Preprints)

|

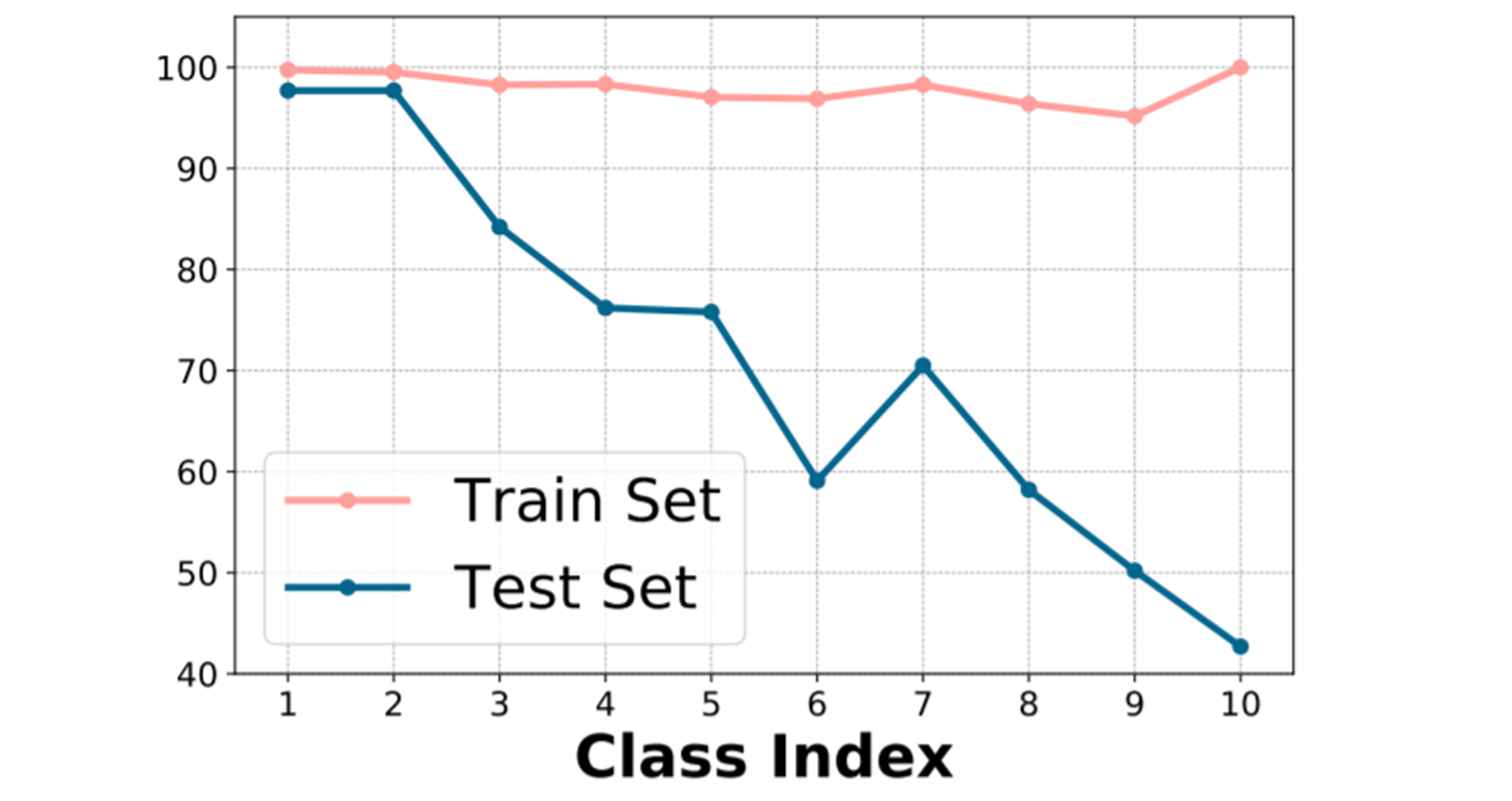

We identify the over-fitting and feature deviation phenomena for minor classes in deep long-tail learning, and propose a simple yet effective class-dependent temperature to compensate for such influence. |

|

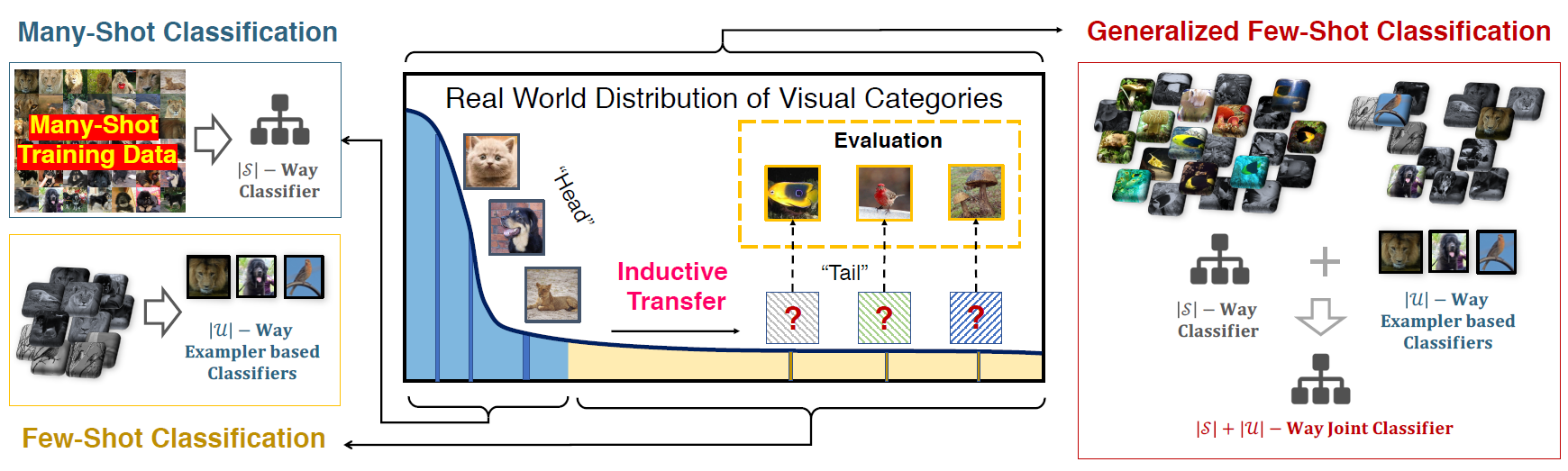

We investigate the problem of generalized few-shot learning and propose a learning framework that learns how to synthesize calibrated few-shot classifiers in addition to the multi-class classifiers of "head" classes. |

Publications (Conference Papers)

|

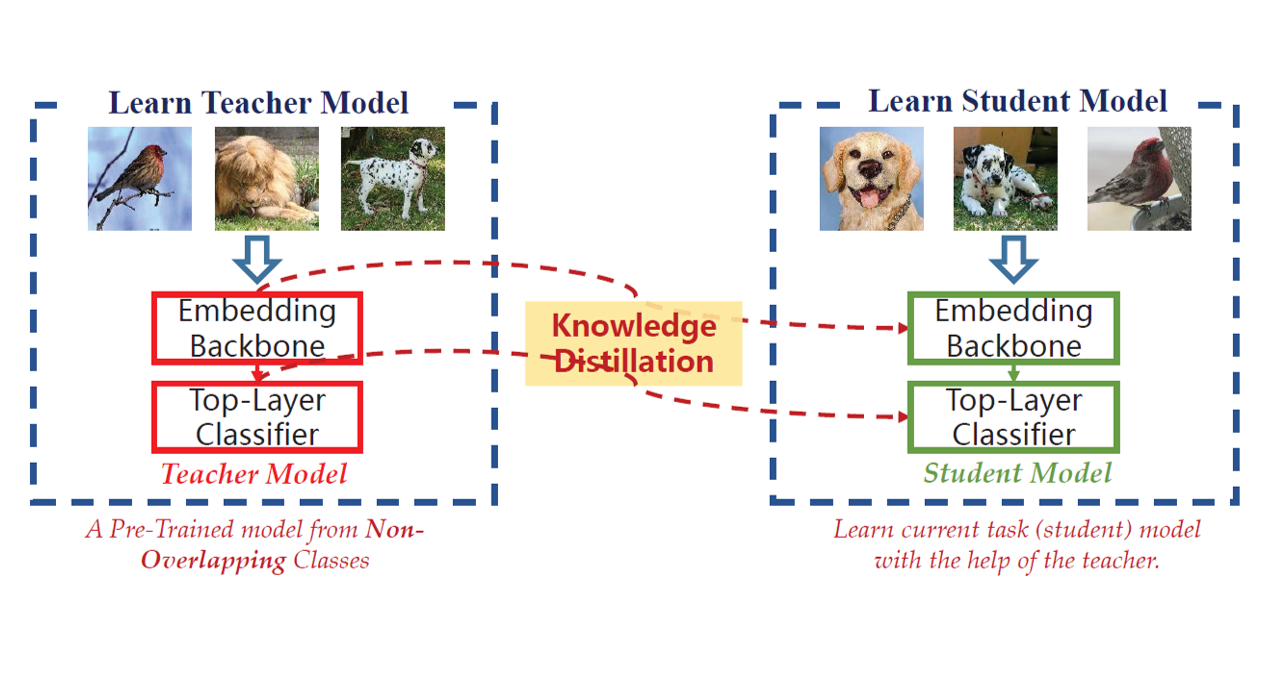

To reuse the cross-task knowledge, we distill the comparison ability and the local classification ability of the embedding and the top-layer classifier from a teacher model, respectively. |

|

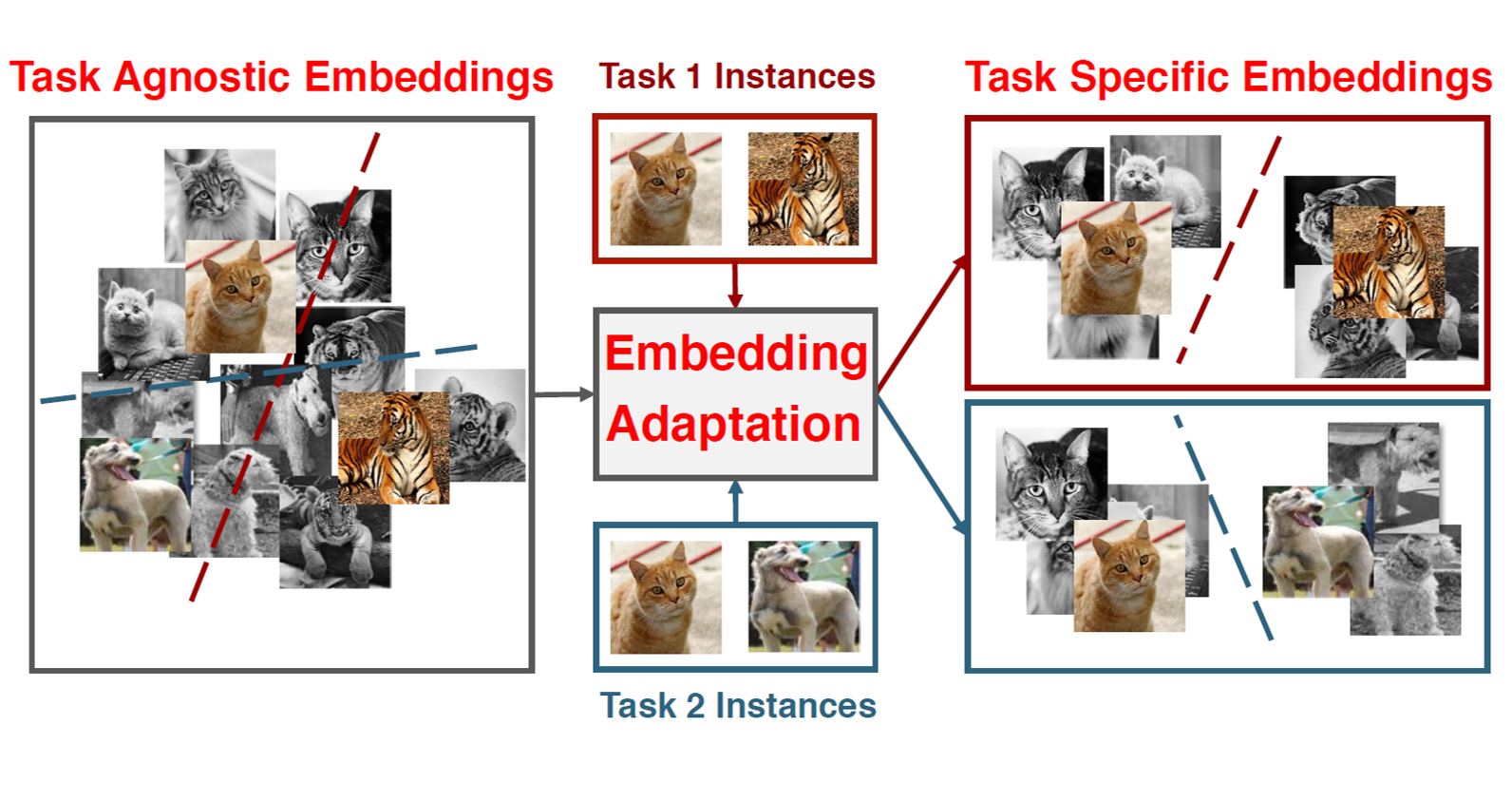

For few-shot learning, we employ a type of self-attention mechanism to transform the embeddings from task-agnostic to task-specific in both seen and unseen classes. |

|

By rethinking meta-learning as a kind of supervised learning, we can borrow supervised learning tricks for the meta-learning paradigm. |

|

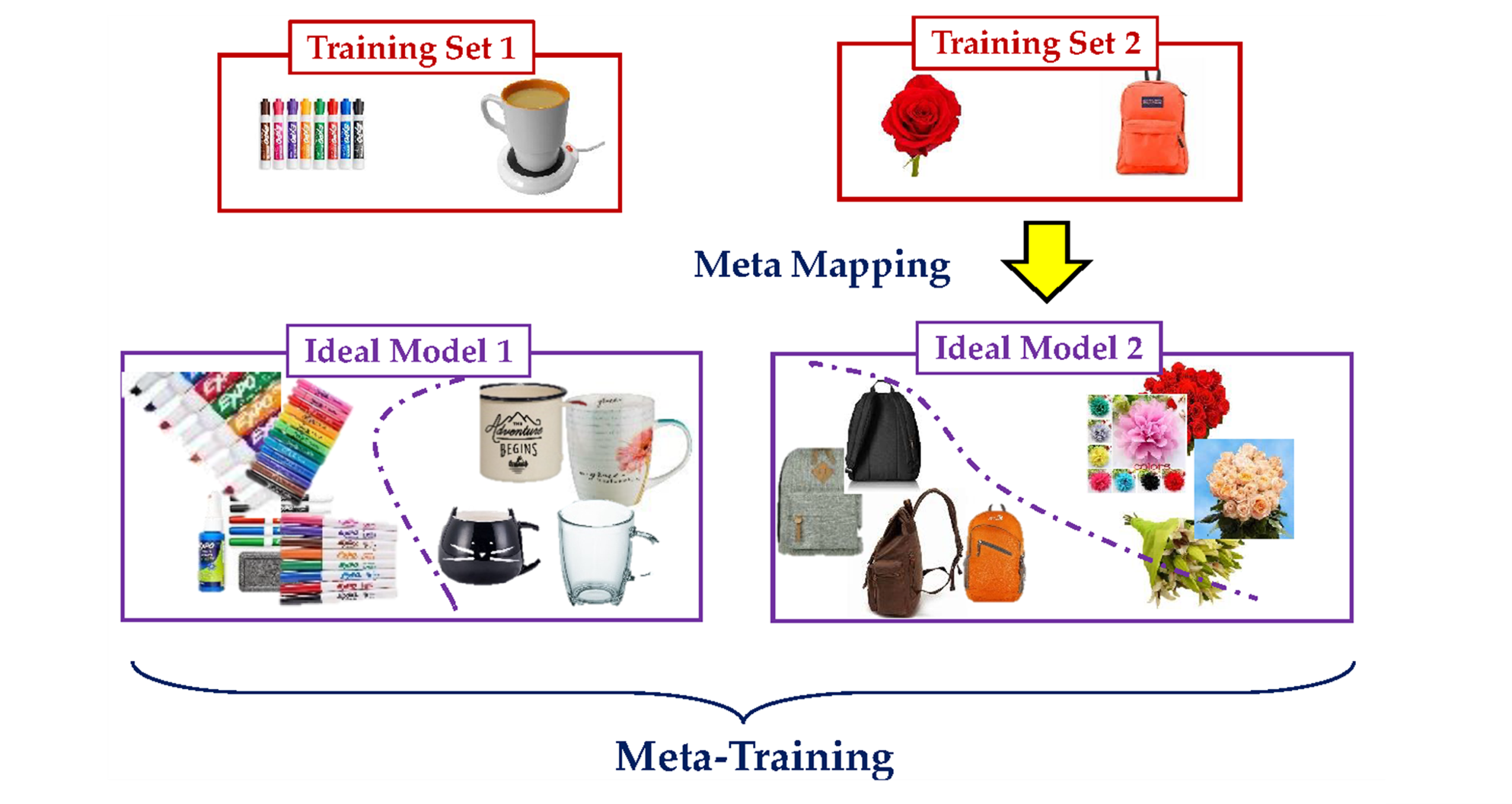

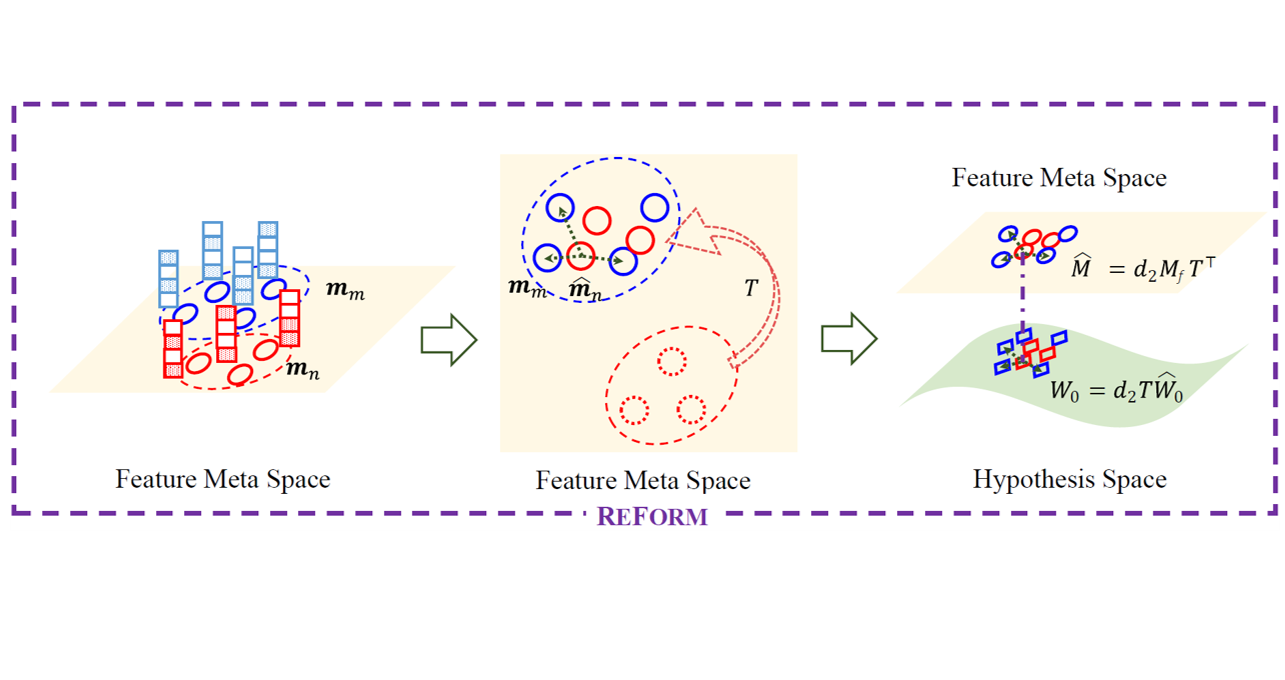

The reusability and evolvability of a model are analyzed in this paper. The proposed framework generates meta features and reuses models across heterogeneous feature domains. |

|

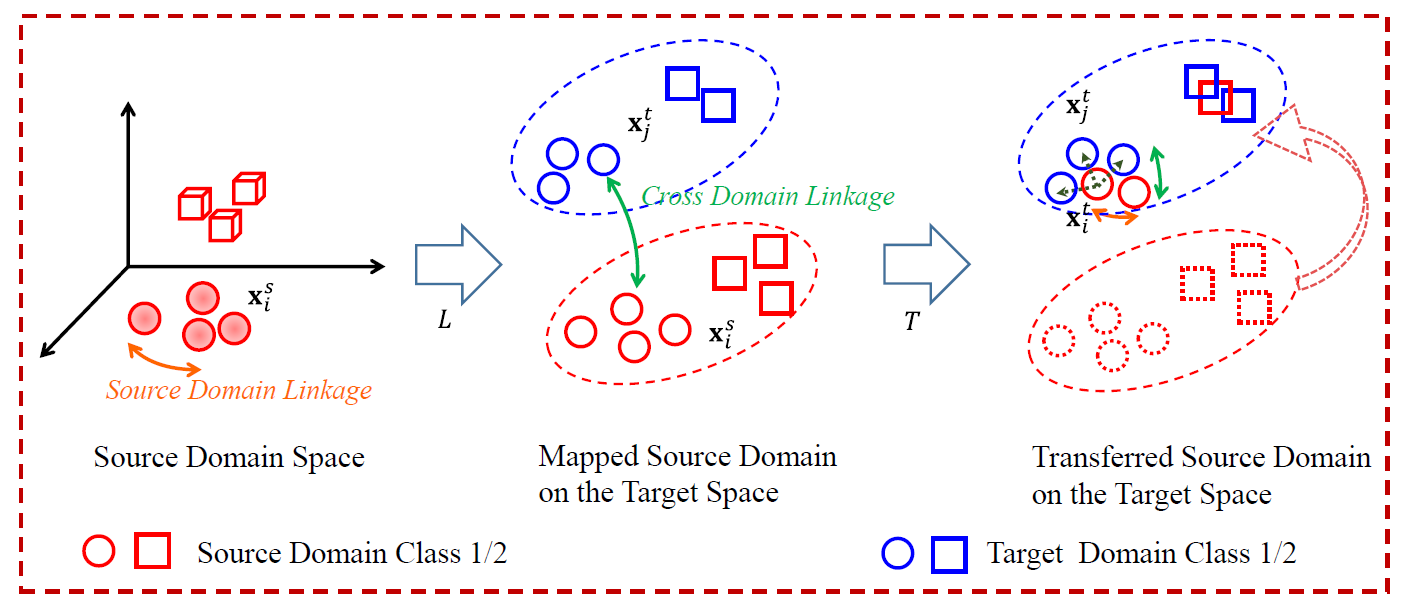

We deal with a specific problem for Optimal Transport Domain Adaptation. Our method extends the ability of OTDA to heterogeneous domains. |

|

A robust Mahalanobis distance metric learning approach that deals effectively with both instance and side-information uncertainty. |

|

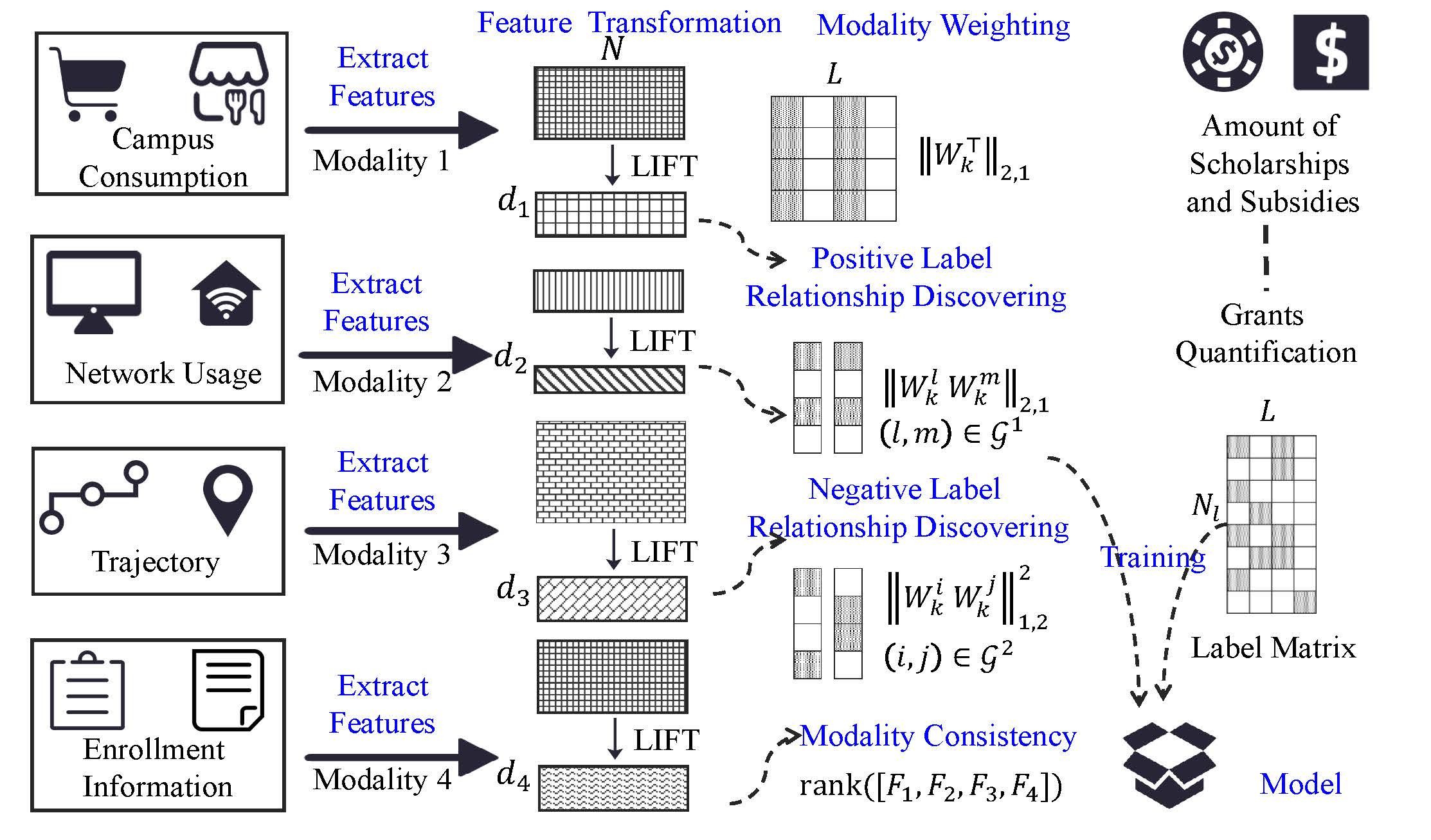

A multi-modal and multi-label method for the real-world task of granting college student scholarships and subsidies. |

|

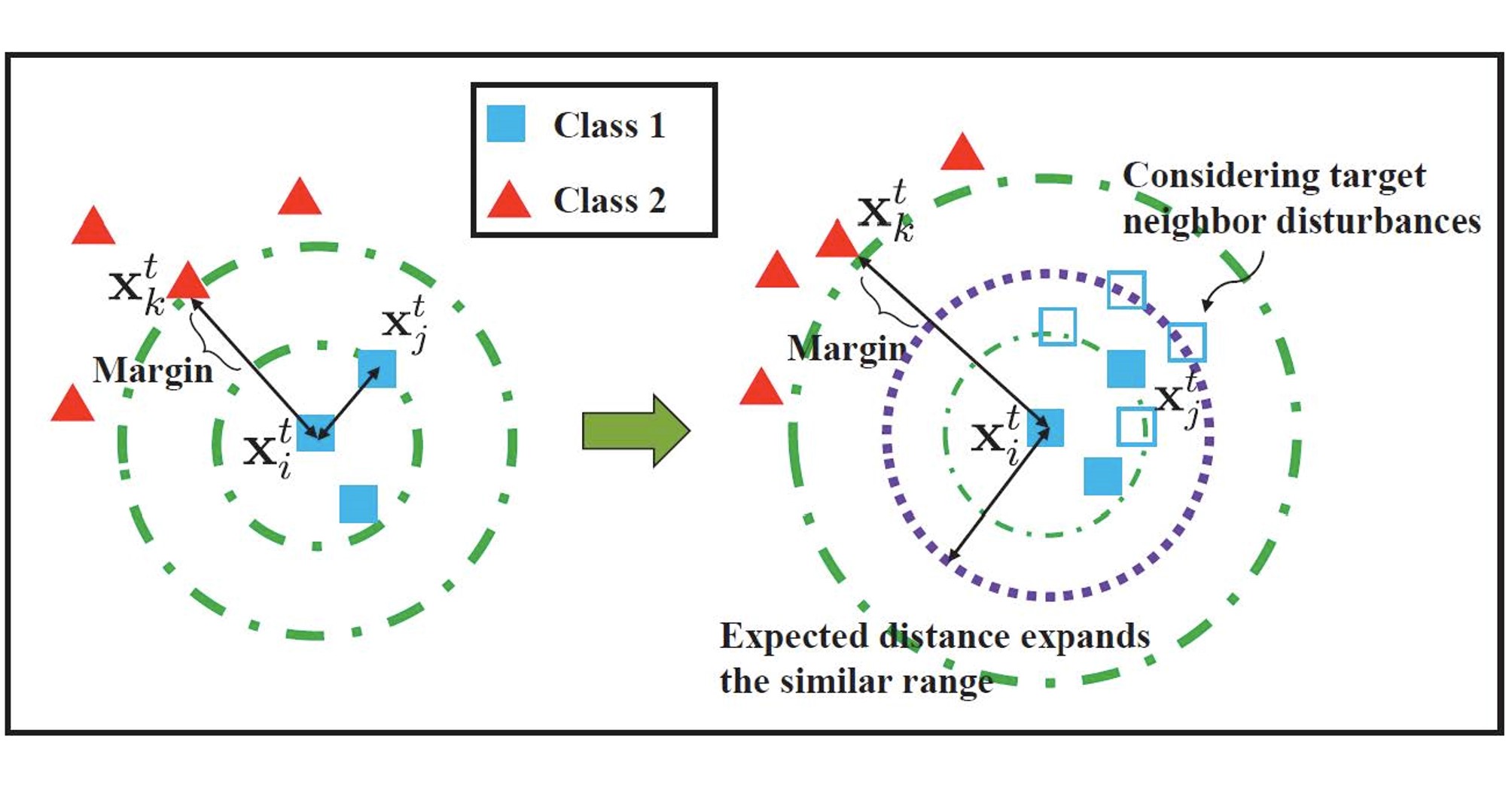

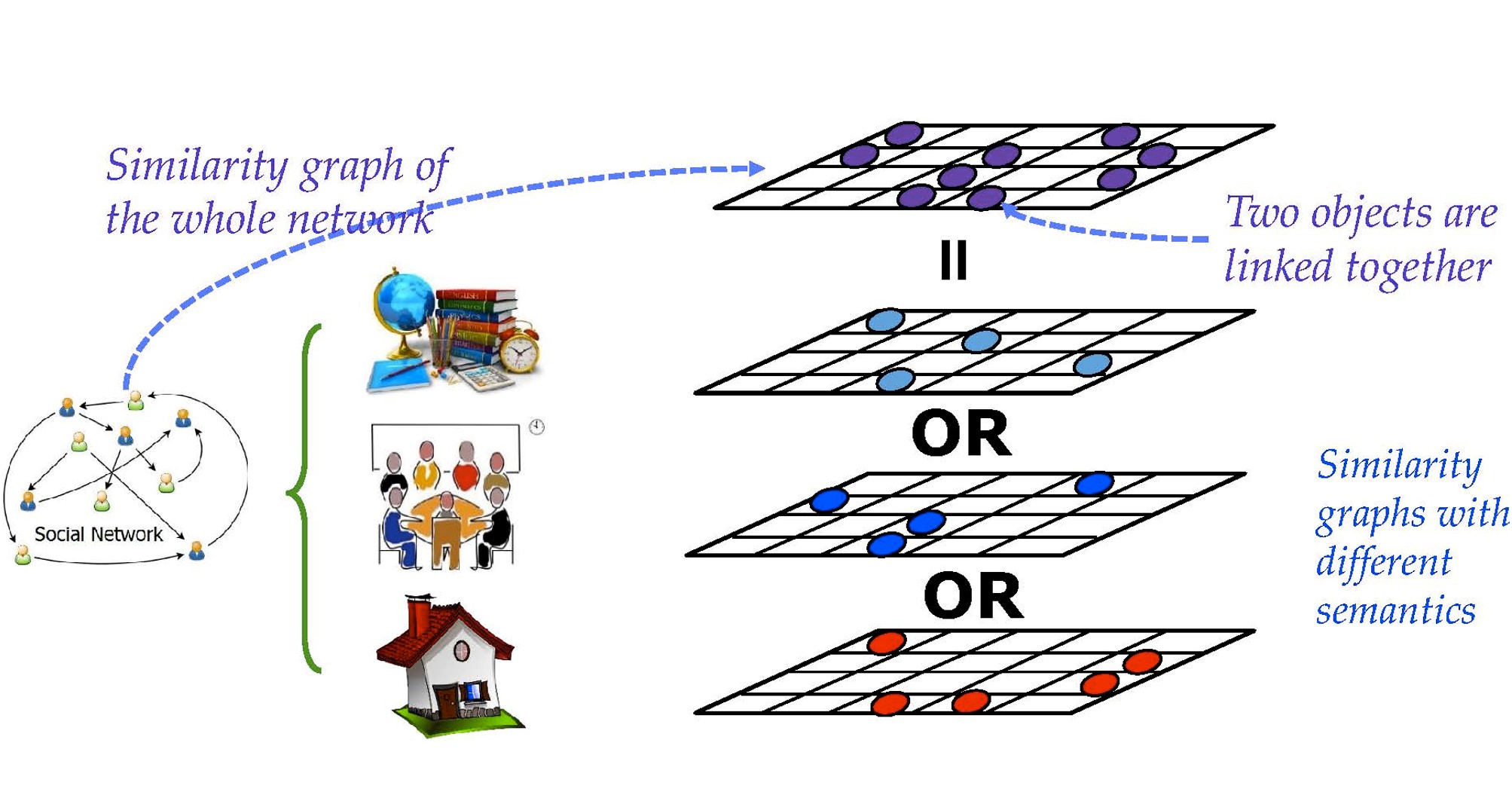

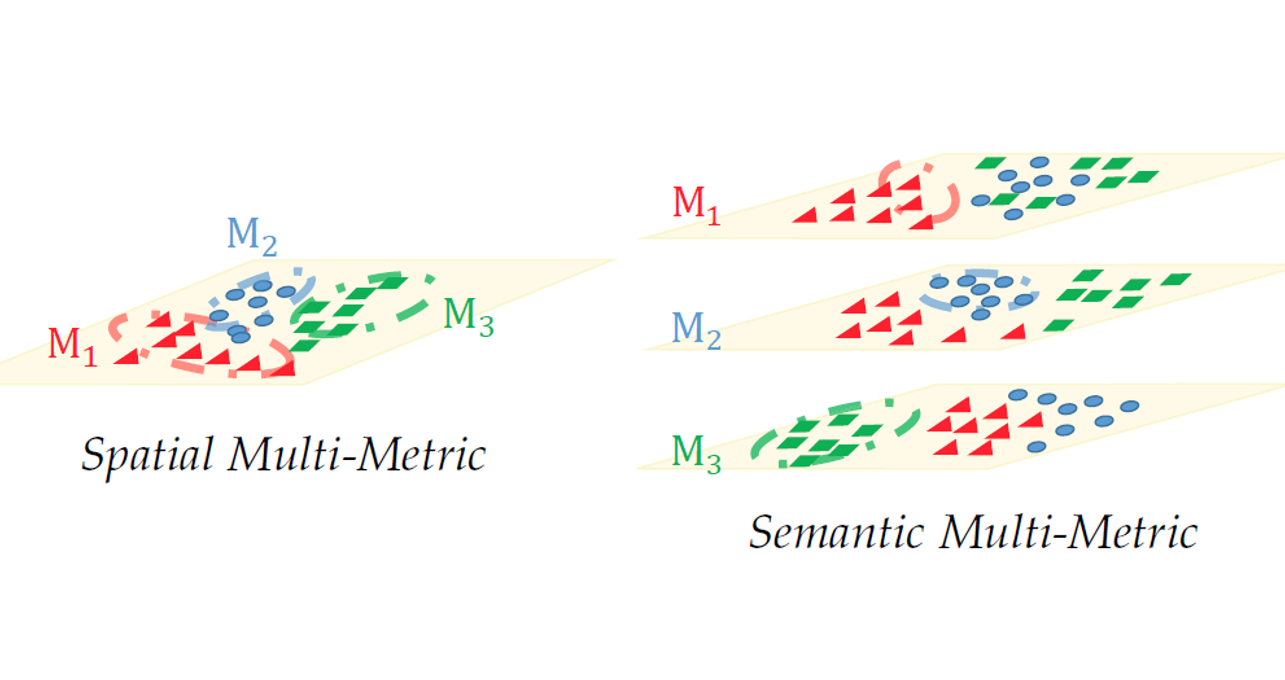

A unified multi-metric learning approach that discovers various types of semantics underlying object linkages. Besides, we provide a unified solver with a theoretical guarantee. |

|

|

A Bayesian perspective on distance metric learning, which can infer the metric inductively. |

|

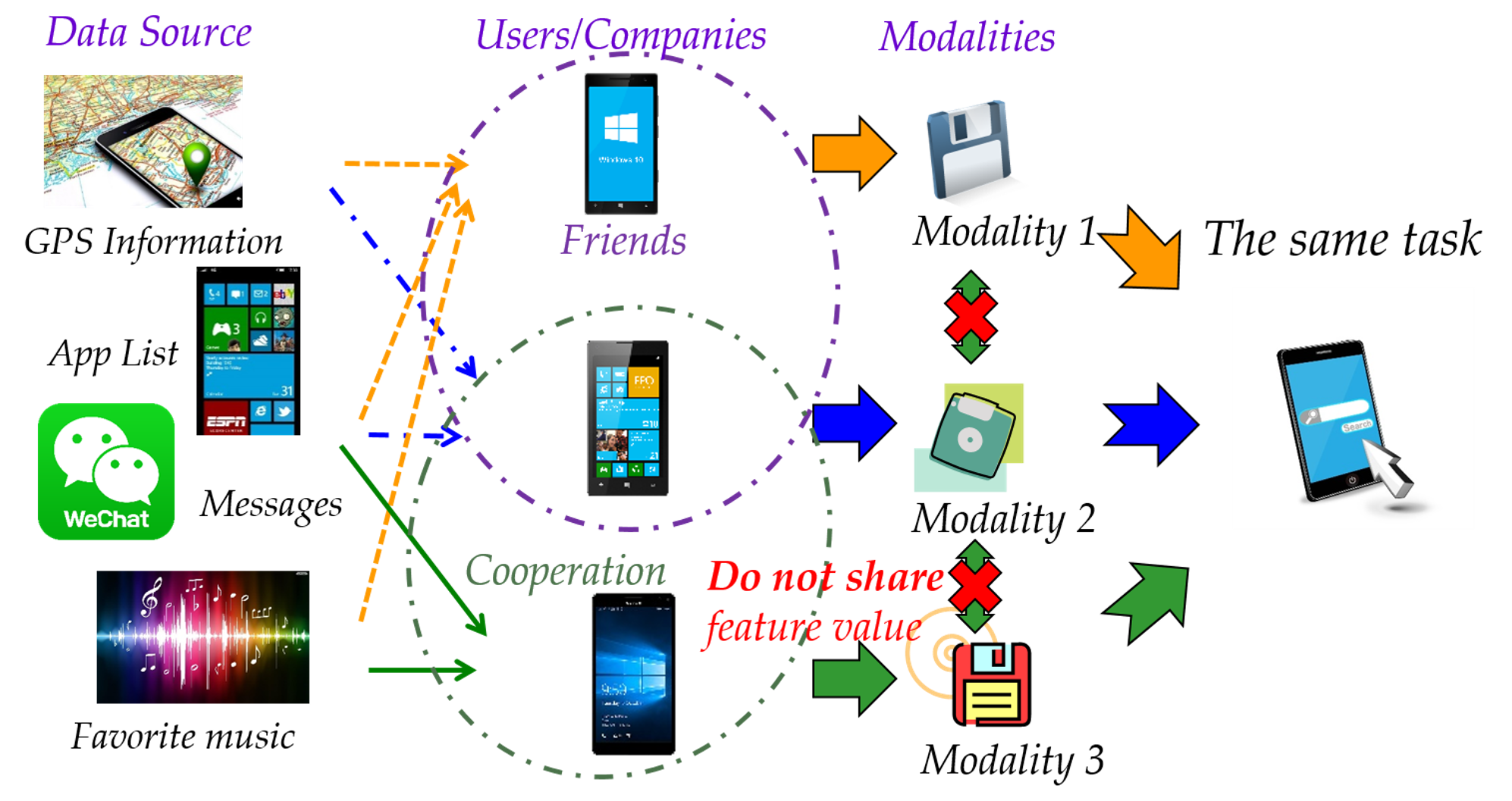

A novel rank consistency criterion is proposed for multi-view learning in a privacy-preserving scenario. |

|

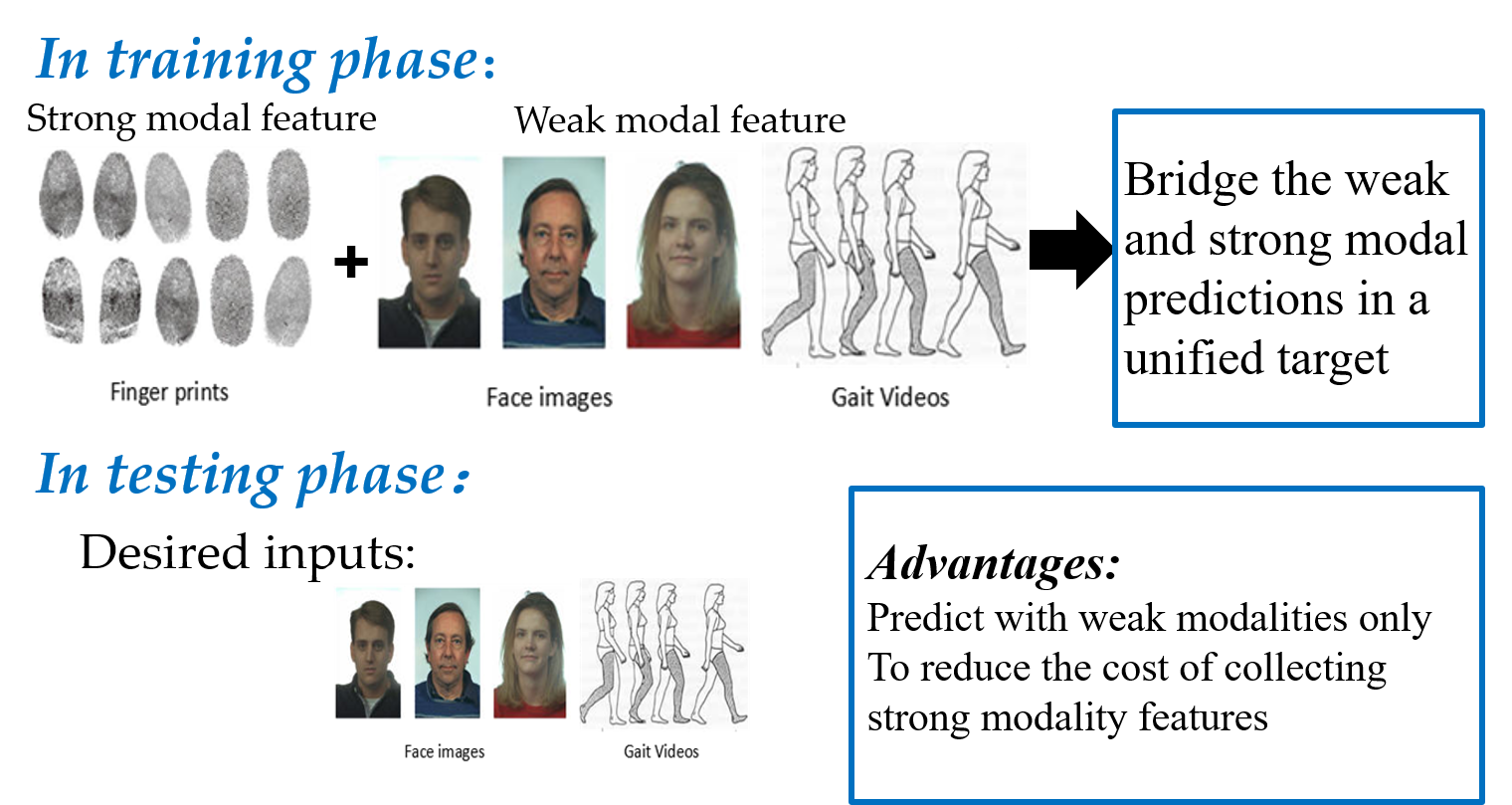

Improves the predictive ability of a cheap weak-modality feature with the help of its strong counterpart. |

Publications (Journal Papers)

|

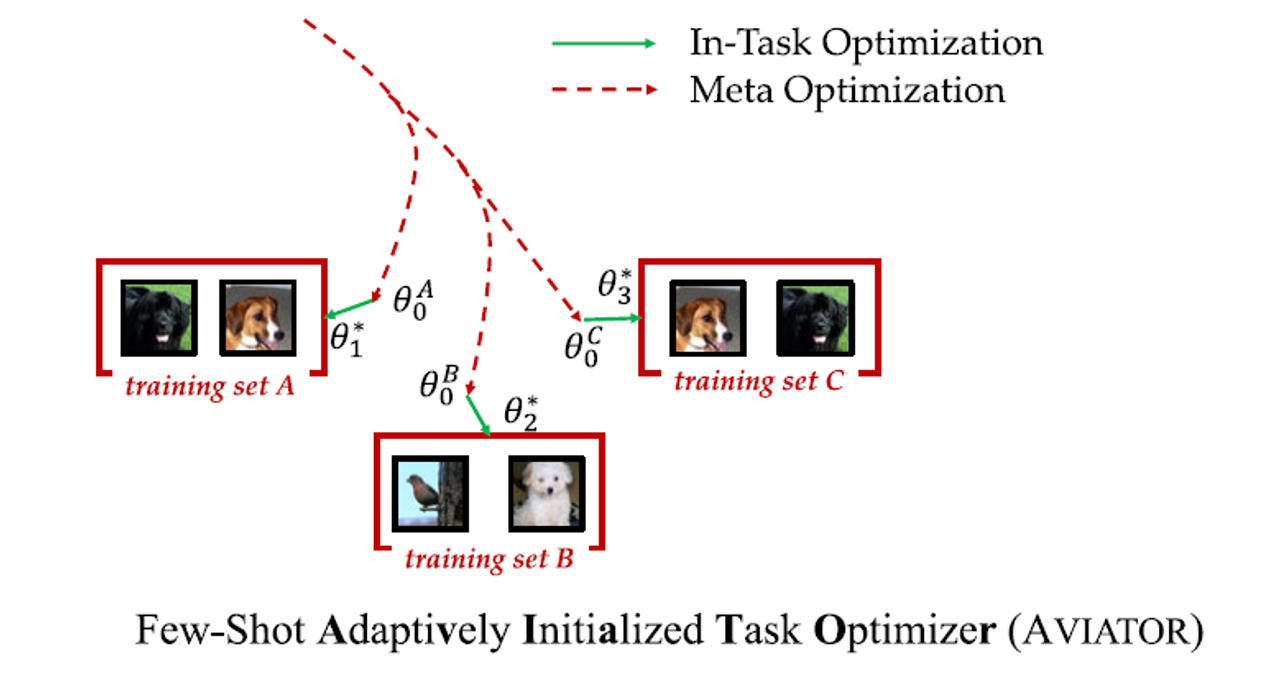

A practical meta-learning approach which efficiently adapts the task-specific initialization to an effective classifier. |

|

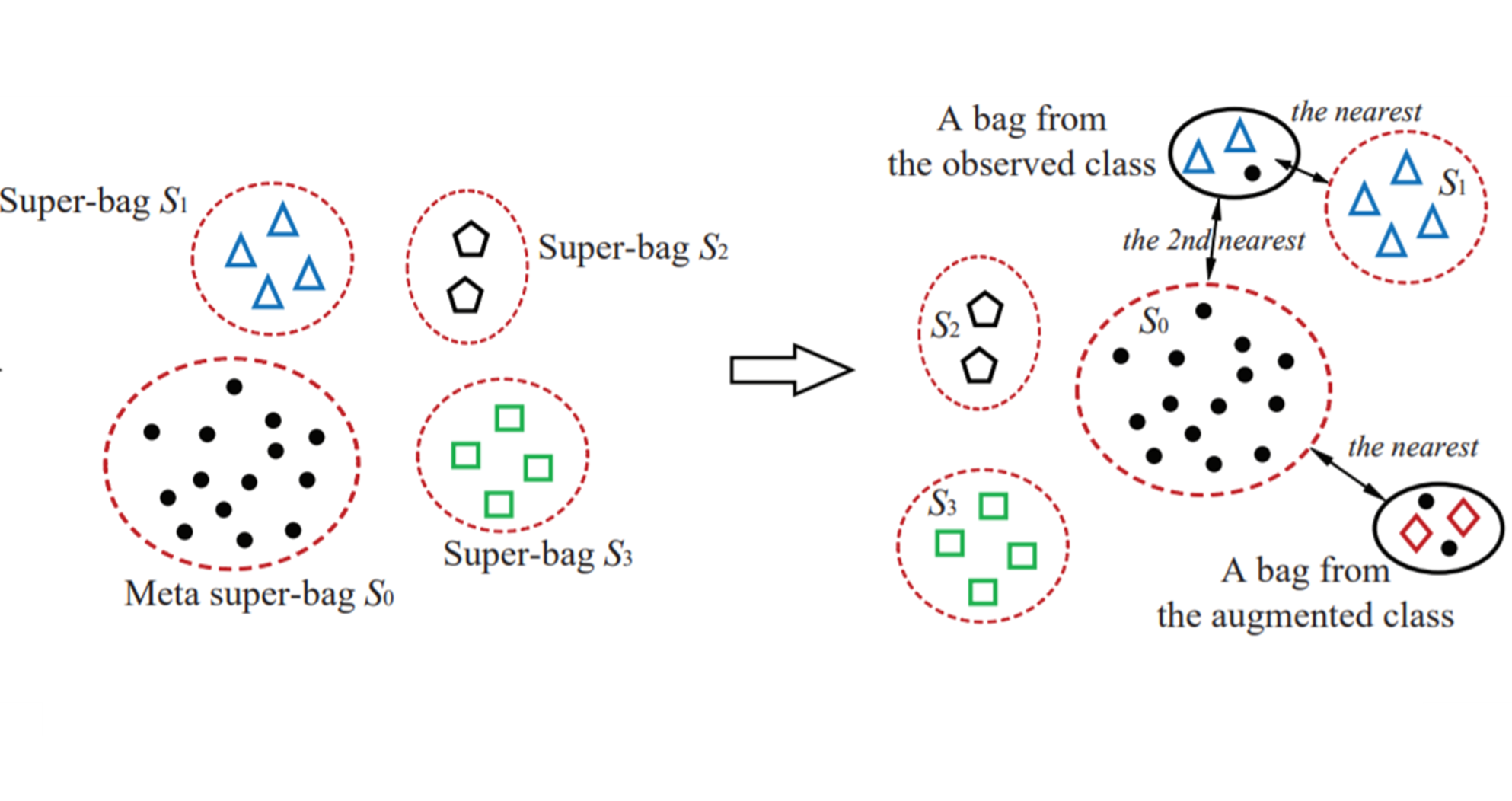

A local metric learning approach to deal with the emerging novel class in multi-instance learning tasks. |

|

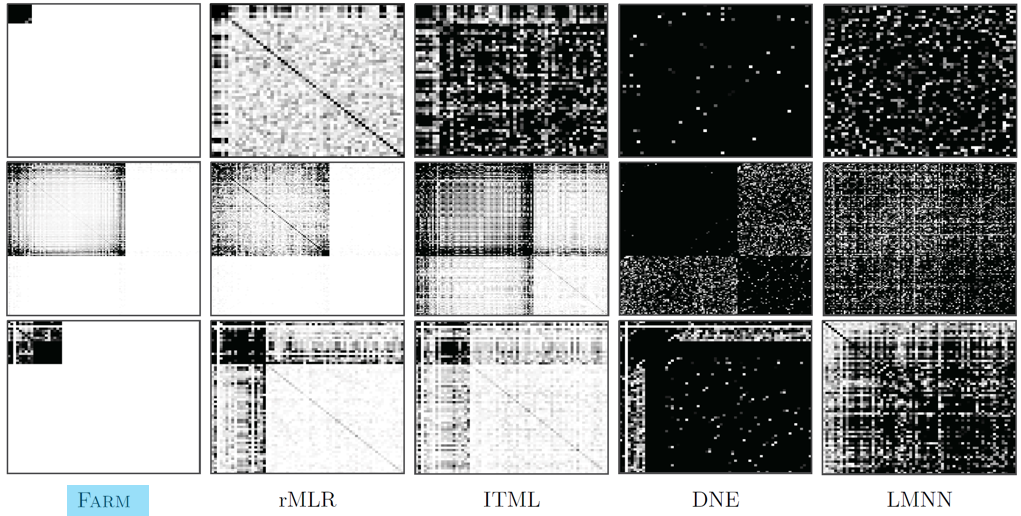

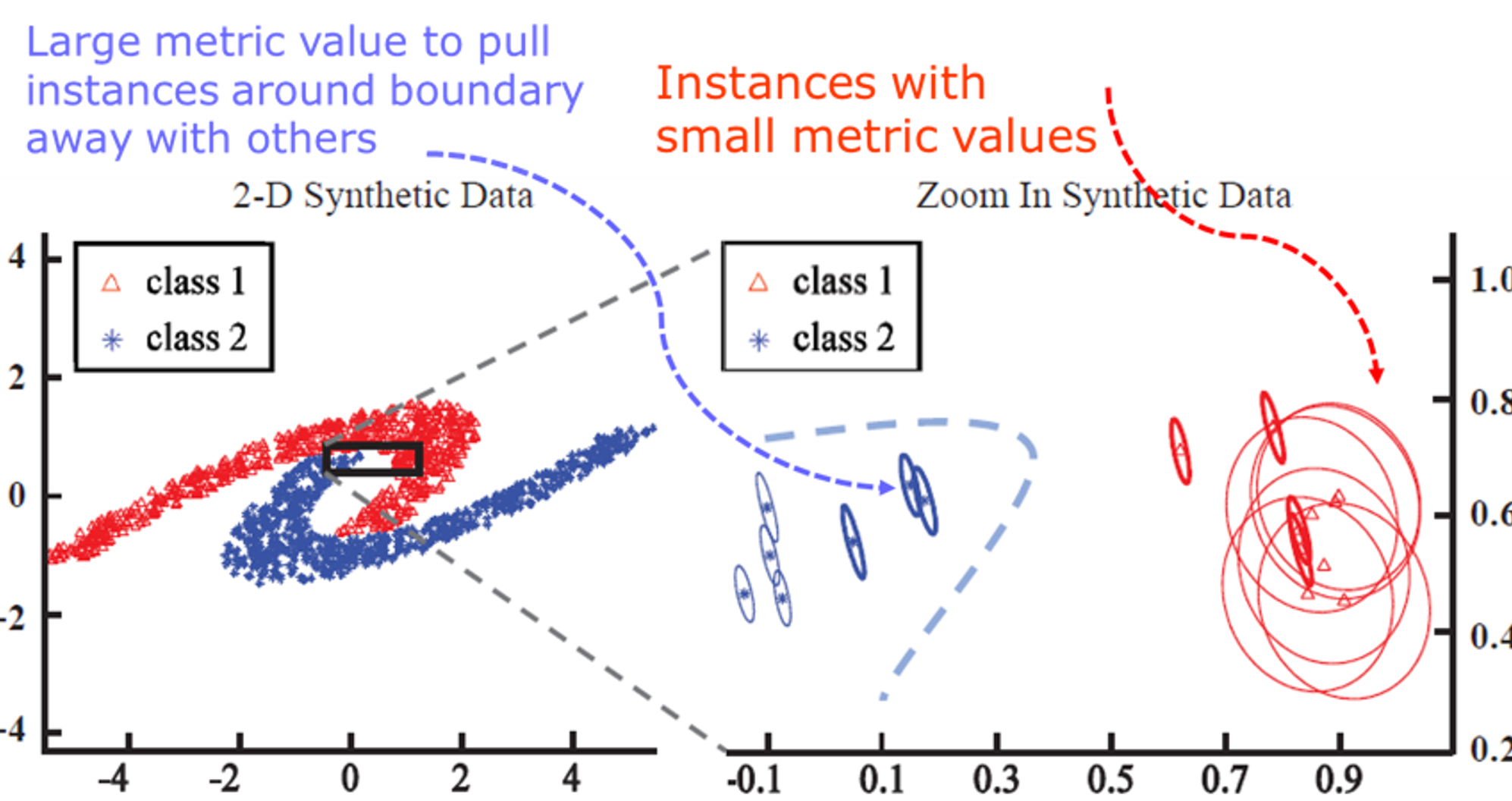

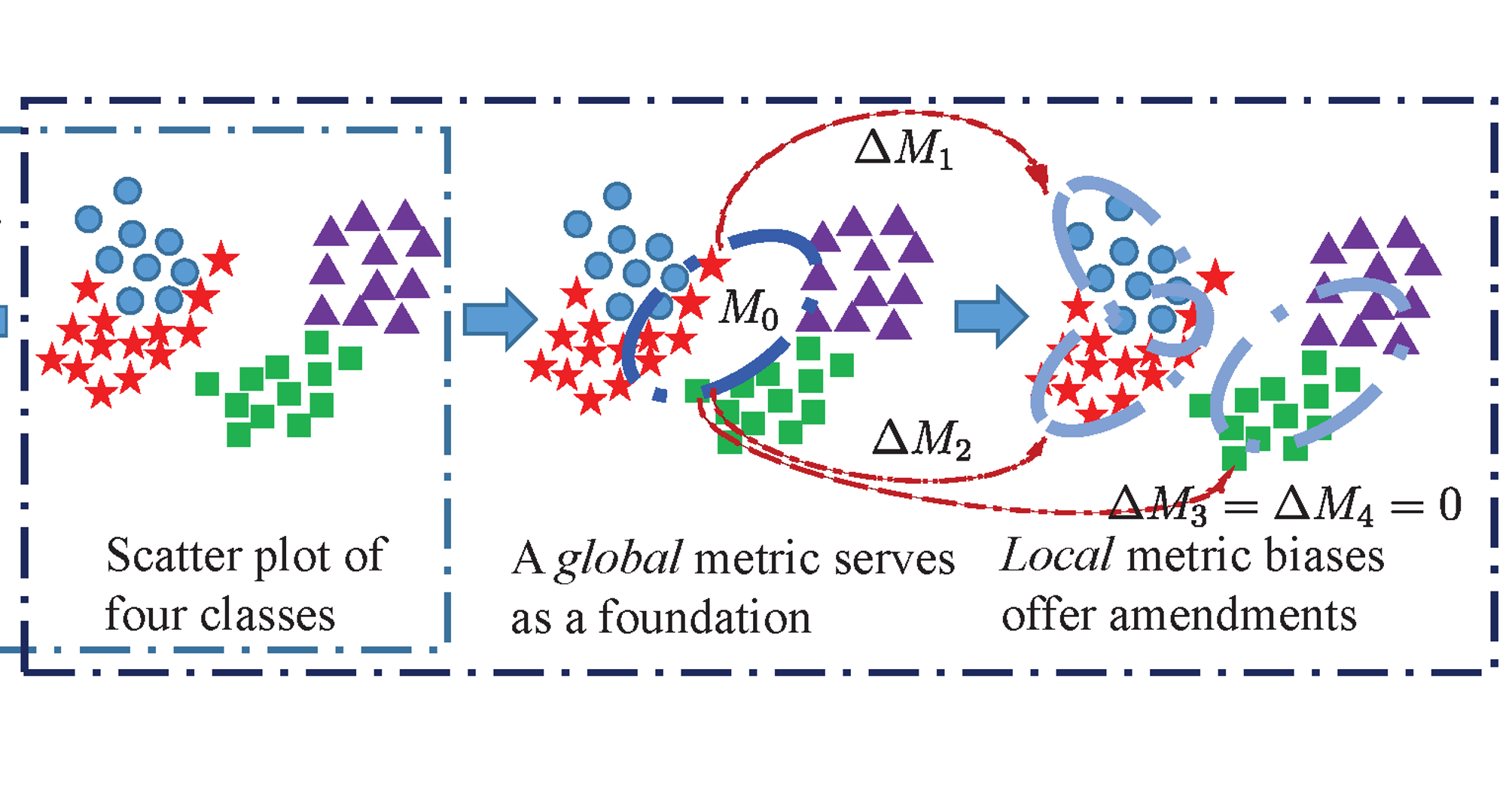

By learning local metrics based on the global one, we try to adaptively allocate local metrics for heterogeneous data. |

|

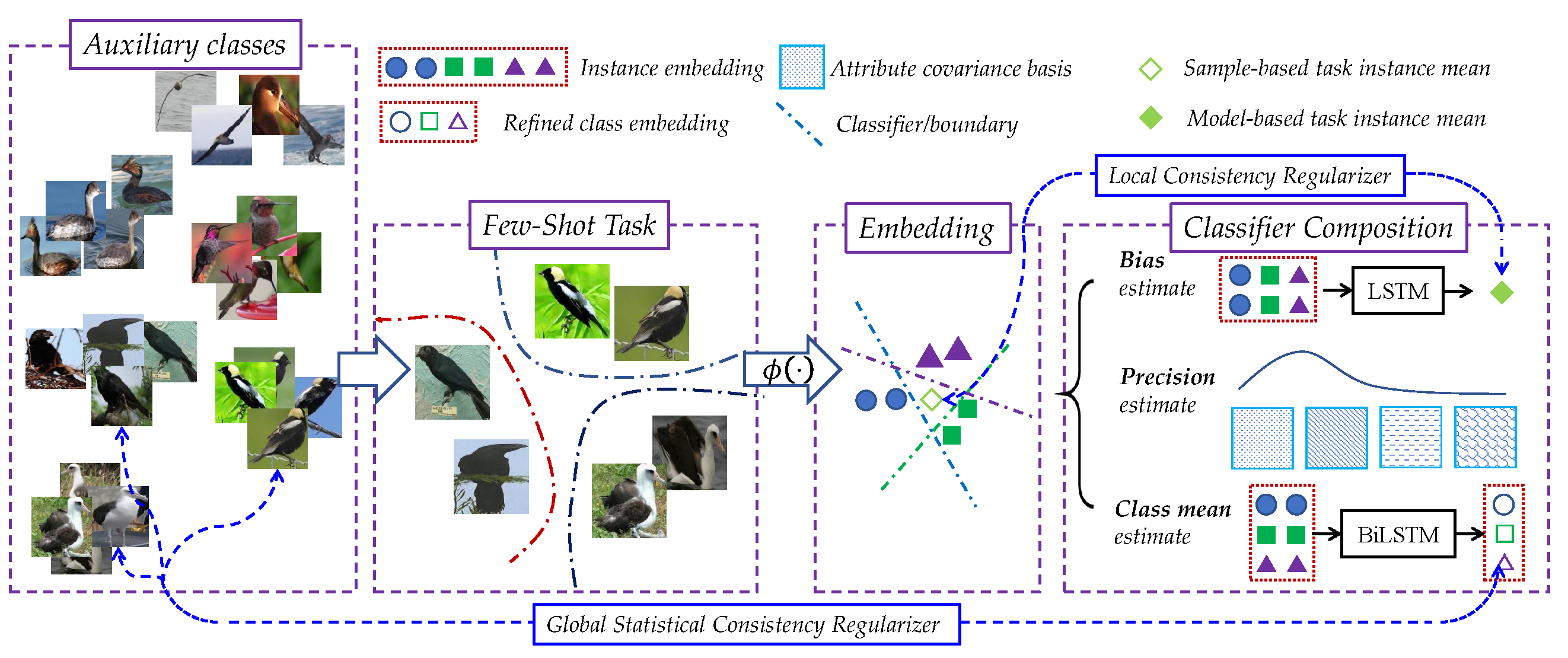

We propose to compose classifiers inspired by the closed form of the least square loss, which fits learning with limited training examples. |

|

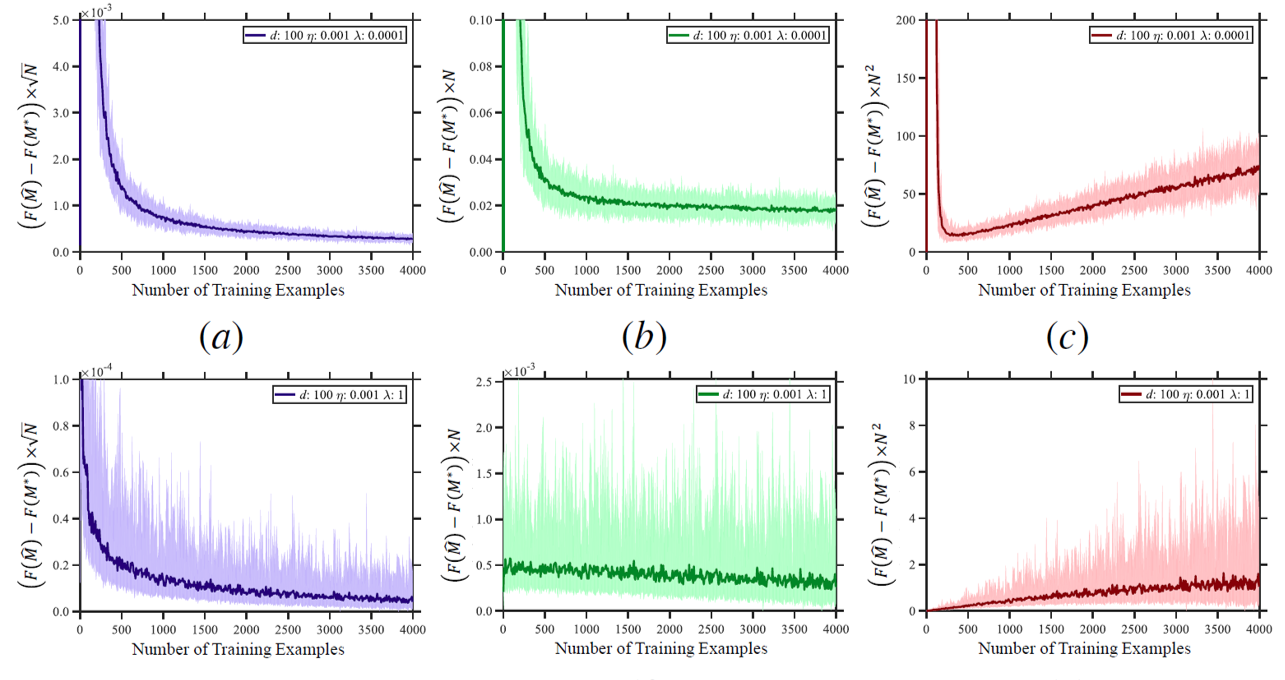

A theoretical analysis of distance metric learning with a fast generalization rate. |

|

This manuscript extends our NIPS work. The concept of semantic metric, generalization analysis, and deep extension are introduced to get a more general framework. |

Journal and Conference Reviewer

TPAMI, TKDE, TKDD, TNNLS, Neurocomputing, AAAI 2021, IJCAI 2021, ECML/PKDD 2021, NeurIPS 2020, ICDM 2020, CVPR 2020, IJCAI 2020, NeurIPS 2019, CVPR 2019, ICCV 2019, IJCAI 2019, AAAI 2019, ACML 2019, ICLR 2019, NeurIPS 2018, ACML 2018, AAAI 2018, IJCAI 2018, CIKM 2017, IJCAI 2017, KDD 2017, PAKDD 2017, SDM 2017, AISTATS 2017, AAAI 2017, NIPS 2016, IJCAI 2016, ICPR 2016, AAAI 2015, IJCAI 2015, PAKDD 2015

Course

- Digital Signal Processing. (For undergraduate and graduate students, Autumn, 2020)

- Introduction to Machine Learning. (With Prof. Zhi-Hua Zhou and Prof. De-Chuan Zhan; For undergraduate and graduate students, Spring, 2020)

Correspondence

Office: Room A205, Yifu Building, Xianlin Campus of Nanjing University

Address: Han-Jia Ye

National Key Laboratory for Novel Software Technology

Nanjing University, Xianlin Campus Mailbox 603

163 Xianlin Avenue, Qixia District, Nanjing 210046, China

- Han-Jia Ye, De-Chuan Zhan. Research Progress in Metric Learning (in Chinese). Communications of the Chinese Association for Artificial Intelligence, 2017, 12: 02-07. [Paper]

- Xiaochuan Zou, Han-Jia Ye, De-Chuan Zhan. Image Classification and Concept Detection based on Strong and Weak Modality (in Chinese with English abstract). Journal of Nanjing University, 2014, 02: 228-234.

| 叶翰嘉 Han-Jia Ye (H.-J. YE) Assistant Researcher LAMDA Group School of Artificial Intelligence Nanjing University, Nanjing 210023, China. Email: yehj [at] lamda.nju.edu.cn |
