Quanshi Zhang
Associate Professor, John Hopcroft Center for Computer Science, School of Electronic Information and Electrical Engineering, Shanghai Jiao Tong University. Email: zqs1022 [AT] sjtu.edu.cn [知乎 (Zhihu)]


Recruiting: I am looking for highly motivated prospective Ph.D., master's, and undergraduate students to work with me on the interpretability of neural networks, unsupervised and weakly-supervised learning, graph mining, and other frontier topics in machine learning and computer vision. Please read “写给学生” (To Students) and send me your CV and transcripts.

Biography (Curriculum Vitae)

I am currently an associate professor at Shanghai Jiao Tong University. I received the B.S. degree in machine intelligence from Peking University, China, in 2009, and the M.Eng. and Ph.D. degrees from the University of Tokyo in 2011 and 2014, respectively, under the supervision of Prof. Ryosuke Shibasaki. In 2014, I became a postdoctoral associate at the University of California, Los Angeles, under the supervision of Prof. Song-Chun Zhu.


Research Interests

My research interests span computer vision, machine learning, robotics, and data mining. I have published papers in top-tier journals and conferences in all four fields, on topics including deep learning, graph theory, unsupervised learning, object detection, 3D reconstruction, 3D point cloud processing, and knowledge mining.

Currently, I lead a group working on explainable AI. Related topics include explainable CNNs, explainable generative networks, unsupervised semanticization of pre-trained neural networks, and unsupervised/weakly-supervised learning of neural networks. I aim to learn interpretable models end to end and/or to transform, without supervision, the black-box knowledge representations of pre-trained neural networks into hierarchical, semantically interpretable models. I also expect that strong interpretability can ensure high transferability of features and help unsupervised/weakly-supervised learning from small data.

Tutorials & Invited Talks

IJCAI 2020 Tutorial on Trustworthiness of Interpretable Machine Learning [Website] [Video]

PRCV 2020 Tutorial on Robust and Explainable Artificial Intelligence [Website]

ICML 2020 Online Panel Discussion: “Baidu AutoDL: Automated and Interpretable Deep Learning”

A few selected studies

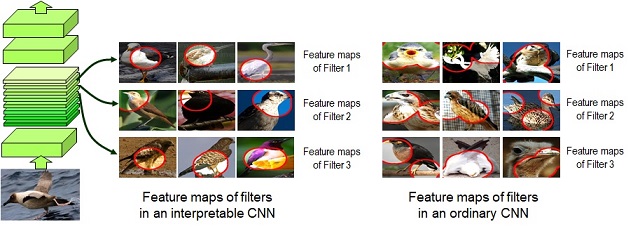

1. Interpretable Convolutional Neural Networks. We add extra losses that force each convolutional filter in our interpretable CNN to represent a specific object part. In comparison, a filter in an ordinary CNN usually represents a mixture of parts and textures. We learn the interpretable CNN without any part annotations for supervision. Clear semantic meanings of middle-layer filters are of significant value in real applications.

Activation regions of two convolutional filters in the interpretable CNN across different frames.

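To make the idea of a filter that responds to a single object part more concrete, the sketch below scores how concentrated one filter's activation map is. It is a hypothetical stand-in, not the loss used in the interpretable-CNN work: the L1-distance template, the decay constant `beta = 4`, the assumption of a square feature map, and the names `peak_position`, `part_template`, and `off_part_ratio` are all assumptions made for illustration. A filter that fires on one part gets a ratio near 0, while a filter that mixes several distant parts gets a larger ratio, so a penalty of this kind could in principle be added to a training objective.

```python
# Hypothetical sketch: a toy "single-part" concentration score for one filter.
# This is NOT the authors' filter loss; template shape and beta are assumptions.

def peak_position(fmap):
    """Return (row, col) of the first maximum activation in a 2-D feature map."""
    best = (0, 0)
    for i, row in enumerate(fmap):
        for j, v in enumerate(row):
            if v > fmap[best[0]][best[1]]:
                best = (i, j)
    return best


def part_template(n, mu, beta=4.0):
    """n x n template centred at mu that decays with L1 distance, floored at -1."""
    return [[max(1.0 - beta * (abs(i - mu[0]) + abs(j - mu[1])) / n, -1.0)
             for j in range(n)] for i in range(n)]


def off_part_ratio(fmap, beta=4.0):
    """Fraction of positive activation mass lying where the template is <= 0,
    i.e. away from the single dominant part. 0.0 means fully concentrated."""
    n = len(fmap)  # assumes a square n x n map
    mu = peak_position(fmap)
    t = part_template(n, mu, beta)
    total = outside = 0.0
    for i in range(n):
        for j in range(n):
            a = max(fmap[i][j], 0.0)  # only positive activations count
            total += a
            if t[i][j] <= 0.0:
                outside += a
    return outside / total if total > 0 else 0.0
```

For example, an 8×8 map whose activation sits at one location (plus a weaker neighbour) scores 0.0, while a map with two equal peaks at opposite corners scores 0.5.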

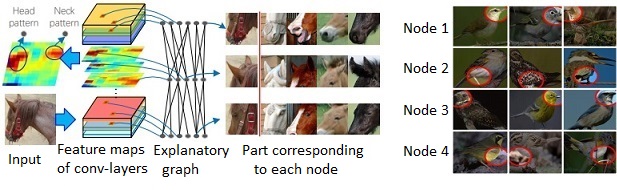

2. Explanatory Graphs for CNNs. We transform traditional CNN representations into interpretable graph representations, i.e., explanatory graphs, in an unsupervised manner. Given a pre-trained CNN, we disentangle the feature representation of each convolutional filter into a number of object parts. We use graph nodes to represent the disentangled part components and graph edges to encode the spatial and co-activation relationships between nodes of different conv-layers. In this way, the explanatory graph encodes the latent knowledge hierarchy hidden inside the middle layers of the CNN.
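The roles of nodes and edges in an explanatory graph can be pictured as a small data structure. The following is a minimal sketch under stated assumptions, not the published explanatory-graph algorithm: part positions are assumed to be already extracted from the feature maps, each edge keeps only a co-activation count and a running mean of the relative displacement between two parts, and the class and field names (`PartNode`, `PartEdge`, `ExplanatoryGraph`) are hypothetical.

```python
# Hypothetical sketch of an explanatory-graph-like structure; not the authors' code.
from dataclasses import dataclass, field
from math import hypot


@dataclass(frozen=True)
class PartNode:
    layer: int      # conv-layer index
    filter_id: int  # filter whose activations this part was disentangled from
    part_id: int    # which of that filter's part components


@dataclass
class PartEdge:
    # Running statistics over images in which both parts were detected.
    co_activations: int = 0
    sum_dx: float = 0.0
    sum_dy: float = 0.0

    def mean_offset(self):
        """Average displacement of the child part relative to the parent part."""
        if self.co_activations == 0:
            return (0.0, 0.0)
        return (self.sum_dx / self.co_activations, self.sum_dy / self.co_activations)


@dataclass
class ExplanatoryGraph:
    nodes: set = field(default_factory=set)
    edges: dict = field(default_factory=dict)

    def observe(self, parent, child, parent_pos, child_pos):
        """Record one image in which both parts fired at the given (x, y) positions."""
        if parent.layer == child.layer:
            raise ValueError("edges connect nodes of different conv-layers")
        self.nodes.update((parent, child))
        e = self.edges.setdefault((parent, child), PartEdge())
        e.co_activations += 1
        e.sum_dx += child_pos[0] - parent_pos[0]
        e.sum_dy += child_pos[1] - parent_pos[1]

    def spatial_deviation(self, parent, child, parent_pos, child_pos):
        """Distance between the observed child position and the position predicted
        by the edge's mean offset; small values mean a spatially consistent pair."""
        dx, dy = self.edges[(parent, child)].mean_offset()
        return hypot(child_pos[0] - (parent_pos[0] + dx),
                     child_pos[1] - (parent_pos[1] + dy))
```

As a usage example, recording two co-activations of a hypothetical "head" part and "eye" part with offsets (-2, -4) and (-2, -6) gives a mean offset of (-2, -5); an eye detected at (1, -1) relative to a head at the origin then deviates from its expected position by 5.0.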

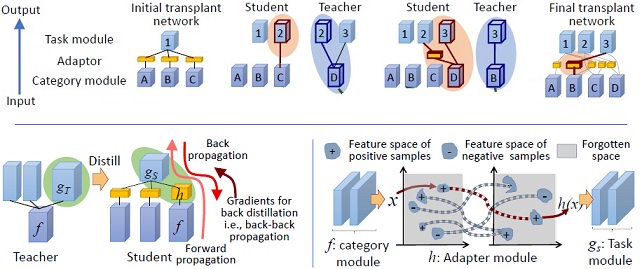

3. Network Transplanting. We merge several networks that are pre-trained for different categories and different tasks to build a generic, distributed neural network in an un-/weakly-supervised manner. Like assembling LEGO blocks, incrementally constructing a generic network by asynchronously merging specific neural networks is a crucial bottleneck in deep learning and is of special value in real applications. Transplanting pre-trained network modules into a generic network without affecting the generality of existing modules poses significant challenges to state-of-the-art algorithms.

Professional Activities

Workshop Co-chair:

Workshop on Explainable AI at CVPR 2019

Workshop on Network Interpretability for Deep Learning at AAAI 2019 (http://networkinterpretability.org)

Workshop on Language and Vision at CVPR 2018 (http://languageandvision.com/)

Workshop on Language and Vision at CVPR 2017 (http://languageandvision.com/2017.html)

Journal Reviewer: International Journal of Computer Vision, Journal of Machine Learning Research, IEEE Transactions on Knowledge and Data Engineering, IEEE Transactions on Multimedia, IEEE Transactions on Signal Processing, IEEE Signal Processing Letters, IEEE Robotics and Automation Letters, Neurocomputing