1. 中国科学院大学数学科学学院, 北京 100049;

2. 航天信息股份有限公司技术研究院, 北京 100195

摘要: 支持向量机作为机器学习中一个经典的分类算法,一直广受数据科学家的喜爱。无论是处理线性可分还是非线性可分数据,传统的支持向量机能够很好地解决二分类问题。针对给定的样本,支持向量机通过最大化最小间隔得到最佳的决策分界面,从而实现对新样本的类别预测。然而现实中的数据更为复杂多样,一方面数据的类别往往多于两个,近年不乏有优秀的多分类支持向量机算法出现;另一方面不同领域的数据的特征集中可能存在相对特殊的变量(称之为主变量,targeted variable),需要将其挑选出来并加以特殊处理,以保持主变量对最终分类结果的重要影响。考虑这两个方面,提出基于角度的变系数多分类支持向量机(TLAMSVM)模型以解决含有主变量的多分类问题。它使用具备更好几何解释能力的基于角度的间隔最大分类框架完成多分类,并引入变系数模型,通过选择合适的局部光滑函数处理主变量对模型的影响。把基于角度的变系数多分类支持向量机分别应用到模拟数据集和真实数据集上。数值结果显示,相比没有使用变系数思想或基于角度的多分类框架的多分类支持向量机,TLAMSVM模型具有更高的预测准确度。

关键词: 局部光滑多分类支持向量机基于角度的间隔最大分类框架

Machine learning, as a new research orientation, plays a significant role in the age of big data. A widely used classification method of machine learning is support vector machine(SVM)[1]. It is a kind of classic margin-based classifier, which seeks for the most appropriate hyperplane in the feature space and solves the optimization problem in the form of (loss+regularization)[2]. In the last decades, SVM and its derived algorithms have held an important place in many fields[3] on account of its perfect prediction accuracy and computational efficiency.

Typical SVM is good at handling binary problems with one discriminant function. As for its theoretical property, it has been proved to have good asymptotic convergence rates and Fisher consistency. Nevertheless, in realistic applications, multi-category problems are more common than binary ones. As an illustration, diagnosis for cancer patients would be divided into several categories by measuring grade malignancy of cancer cells or tissues. So, multi-category support vector machine(MSVM) is proposed. Apart from conventional methods such as one-versus-one(OvO) and one-versus-rest(OvR)[4-5], which train a sequence of binary SVMs, there are still many simultaneous multi-classification methods, which consider k classes in a single optimization simultaneously, learn k fitting functions, and make classification decision based on specific prediction rule, such as Crammer and Singer[6-7] etc. However, using only (k-1) functions should be adequate for simultaneous multi-classification methods since binary classifiers only need one function, and thus the classification function space is reduced to (k-1)-dimension by adding a sum-to-zero constraint,

Apart from expanding the numbers of category for SVM, researching the interrelation of covariant variables is a worthy direction to study as well. For example, SVM is a popular algorithm in disease research to predict disease risk status of patients. However Wei et al.[11] mentioned that some variables themselves might make little contribution to prediction but play significant roles when they are coordinated with other correlated variables. So the method that uses local linear smoothing SVM on the entire feature variable space is proposed by Ladicky and Torr[12]. Since it does not accomplish smoothing technique successfully in high-dimensional feature space, Chen[13] developed a targeted local smoothing kernel weighted support vector machine (KSVM), which only needs the data in targeted dimension to be plentiful. It achieves an age-dependent prediction rule to discriminate disease risk via optimizing a kernel weighted problem. It gets quite good results on binary classification problems. It is worth applying such a local weighted tool to MSVM.

In this study, we mainly make two contributions. Firstly, we combine angle-based multi-category classification framework of RAMSVM model and targeted local smoothing method of KSVM model, and propose a new MSVM model, targeted local angle-based multi-category support vector machine(TLAMSVM), which offers a better classification prediction for certain data sets. Secondly, TLAMSVM model provides more detailed analysis for a sample according to its value of targeted variable. Before introducing TLAMSVM model, some fundamental knowledge of RAMSVM model and KSVM model will be described in section 2, which are the basis of TLAMSVM model. In section 3, the detailed presentation of TLAMSVM model will be shown, followed by its computational process of coordinate descent method. In section 4, we will show some experimental results in four simulated and real data sets and give some illustrations to demonstrate the strengths of TLAMSVM model. At the end, we will conclude the article with discussions in section 5.

1 Background and related work1.1 Reinforced angle-based multi-category support vector machine(RAMSVM)As for k-class data sets, the angle-based large-margin classification framework defines a k-vertex simplex in (k-1) dimensional space, W1, W2, …, Wk, which correspond to each class,

| $W_{j}=\left\{\begin{array}{ll}{(k-1)^{-\frac{1}{2}} \boldsymbol{\mathit{1}}, } & {j=1} \\ {-\frac{1+\sqrt{k}}{(k-1)^{3 / 2}} \boldsymbol{\mathit{1}}+\sqrt{\frac{k}{k-1}} e_{j-1}, } & {2 \leqslant j \leqslant k}\end{array}\right.$ |

According to the regular form of a large-margin classification algorithm, we add a penalty term J(·) to the loss function. So the optimization problem becomes

| ${\min\limits_{f \in F}}\frac{1}{n}\sum\limits_{i = 1}^n l \left( {\left\langle {f\left( {{x_i}} \right), {W_{{y_i}}}} \right\rangle } \right) + \lambda J(f), $ | (1) |

| $\begin{array}{*{20}{l}}{{\min \limits_{f \in F}}\frac{1}{n}\sum\limits_{i = 1}^n {\{ (1 - \gamma )\sum\limits_{j \ne {y_i}} {{{\left[ {1 + < f\left( {{x_i}} \right), {W_j} > } \right]}_ + }} + } }\\{\quad \gamma {{\left[ {(k - 1) - \left\langle {f\left( {{x_i}} \right), {W_{{y_i}}}} \right\rangle } \right]}_ + }\} + \lambda J(f), }\end{array}$ | (2) |

1.2 Kernel weighted support vector machine(KSVM)Take binary medical data as an example, let Y denote the set of labels {1, -1}, which represents whether a subject is at the disease risk or not. Let "age" be the targeted variable U, and variable X contains other features. Now the purpose is to train a classifier which depends on age information. Firstly, we consider making some changes on typical discriminant score as follows:

| $\alpha(U)+\boldsymbol{X}^{\mathrm{T}} \boldsymbol{\beta}(U), $ | (3) |

After choosing an appropriate loss function, the targeted local smoothing kernel weighted support vector machine (KSVM) model trains a classifier via solving

| $\begin{array}{*{20}{c}}{\mathop {\min }\limits_{\alpha \left( {{u_0}} \right), \beta \left( {{u_0}} \right)} \sum\limits_i {{K_{{h_n}}}} \left( {{U_i} - {u_0}} \right) \cdot }\\{l\left\{ {{Y_i}, {X_i};\alpha \left( {{u_0}} \right), \beta \left( {{u_0}} \right)} \right\} + \lambda \left\| {\beta \left( {{u_0}} \right)} \right\|, }\end{array}$ |

After getting the estimated values of coefficients

2 Methodology and computationIn this section we firstly illuminate how to design multi-category local smoothing kernel weighted optimization problem in TLAMSVM model. Then we list out some main computational steps of TLAMSVM model using coordinate descent method. We take linearly separable case as example, since to non-separable case, we can use kernel trick.

2.1 Targeted local angle-based multi-category support vector machine(TLAMSVM)Assume that there are n observations, each of which has p+1 features, one of which is the targeted variable U. For simplicity, the intercepts are included in X. Denote the value of each feature of ith observation as Xi=(1, Xi(1), Xi(2), …, Xi(p))T. X(j)=(X1(j), X2(j), …, Xn(j)) representing the value of jth feature for all observations. Therefore the sample matrix Xp*n could be written as

| $\mathit{\boldsymbol{X}} = \left( {{X_1}, {X_2}, \cdots , {X_n}} \right) = {\left( {1, {X^{(1)}}, {X^{(2)}}, \cdots , {X^{(p)}}} \right)^{\rm{T}}}.$ | (4) |

| ${f_q}\left( {{u_0}, \mathit{\boldsymbol{X}}} \right) = {\mathit{\boldsymbol{X}}^{\rm{T}}}{\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right)\;\;\;(q = 1, \cdots , k - 1), $ | (5) |

| $\begin{array}{*{20}{l}}{\mathop {\min }\limits_{f \in F} \sum\limits_i {{K_{{h_n}}}} \left( {{U_i} - {u_0}} \right)\{ (1 - \gamma )}\\{\sum\limits_{j \ne {y_i}} {{{\left[ {1 + \left\langle {f\left( {{u_0}, {X_i}} \right), {W_j}} \right\rangle } \right]}_ + }} + }\\\gamma\left[(k-1)-\left\langle f\left(u_{0}, X_{i}\right), W_{y_{i}}\right\rangle\right]_{+} \}+\frac{\lambda}{2} J(f), \end{array}$ | (6) |

| $\begin{array}{c}{-\left\langle f\left(u_{0}, X_{i}\right), W_{j}\right\rangle> 1 \text { and } 2-k+} \\ {\left\langle f\left(u_{0}, X_{i}\right), W_{y_{i}}\right\rangle> 1}, \end{array}$ |

| $\begin{array}{c}{-\left\langle f\left(u_{0}, X_{i}\right), W_{j}\right\rangle> 1-\xi_{i, j}, (i=1, \cdots, n)}, \\ {2-k+\left\langle f\left(u_{0}, X_{i}\right), W_{y_{i}}\right\rangle> 1-\xi_{i, j}}, \\ {\left(i=1, \cdots, n, j \neq y_{i}\right).}\end{array}$ | (7) |

| $\begin{array}{*{20}{c}}{\mathop {\min }\limits_{{\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right), {\xi _{i, j}}} \frac{{n\lambda }}{2}\sum\limits_{q = 1}^{k - 1} {\mathit{\boldsymbol{\beta }}_q^{\rm{T}}} \left( {{u_0}} \right)\mathit{\boldsymbol{\beta }}_q^{\rm{T}}\left( {{u_0}} \right) + }\\{\sum\limits_i {{K_{{h_n}}}} \left( {{U_i} - {u_0}} \right)\left[ {\gamma {\xi _{i, {y_i}}} + (1 - \gamma )\sum\limits_{j \ne {y_i}} {{\xi _{i, j}}} } \right]}\\{\rm{s}}{\rm{.t}}{\rm{.}}\left\{ {\begin{array}{*{20}{l}}{{\xi _{i, j}} \ge 0(i = 1, \cdots , n, j = 1, \cdots , k)}\\{{\xi _{i, {y_i}}} + \left\langle {f\left( {{u_0}, {X_i}} \right), {W_{{y_i}}}} \right\rangle - (k - 1) \ge 0(i = 1, \cdots , n).}\\{{\xi _{i, j}} - \left\langle {f\left( {{u_0}, {X_i}} \right), {W_j}} \right\rangle - 1 \ge 0\left( {i = 1, \cdots , n, j \ne {y_i}} \right)}\end{array}} \right.\end{array}$ | (8) |

| $\begin{aligned} L=& \frac{n \lambda}{2} \sum\limits_{q=1}^{k-1} \boldsymbol{\beta}_{q}^{\mathrm{T}}\left(u_{0}\right) \boldsymbol{\beta}_{q}\left(u_{0}\right)+\\ & \sum\limits_{i=1}^{n} K_{h_{n}}\left(U_{i}-u_{0}\right)\left[\gamma \xi_{i, y_{i}}+(1-\gamma) \sum\limits_{j \neq y_{i}} \xi_{i, j}\right]-\\ & \sum\limits_{i=1}^{n} \alpha_{i, y_{i}}\left[\xi_{i, y_{i}}+\left\langle f\left(u_{0}, X_{i}\right), W_{j}\right\rangle-(k-1)\right]-\\ & \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{k} \tau_{i, j} \xi_{i, j}-\sum\limits_{i=1}^{n} \sum\limits_{j \neq y_{i}} \alpha_{i, j}\\ &\left[\xi_{i, j}-\left\langle f\left(u_{0}, X_{i}\right), W_{j}\right\rangle- 1\right]. \end{aligned}$ |

| $\begin{aligned} L=& \frac{n \lambda}{2} \sum\limits_{q=1}^{k-1} \boldsymbol{\beta}_{q}^{\mathrm{T}}\left(u_{0}\right) \boldsymbol{\beta}_{q}\left(u_{0}\right)+\\ & \sum\limits_{i=1}^{n} \sum\limits_{j=1}^{k}\left[A_{i, j}-\tau_{i, j}-\alpha_{i, j}\right] \xi_{i, j}+\sum\limits_{i=1}^{n} \alpha_{i, y_{i}}(k-1)+\\&{\sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } - \sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} \left\langle {f\left( {{u_0}, {X_i}} \right), {W_{{y_i}}}} \right\rangle + }\\&{\sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } \left\langle {f\left( {{u_0}, {X_i}} \right), {W_j}} \right\rangle , }\end{aligned}$ | (9) |

Let the last two parts of (10) as L1 and L2,

| $\begin{aligned} L_{1} &=-\sum\limits_{i=1}^{n} \alpha_{i, y_{i}}\left\langle f\left(u_{0}, X_{i}\right), W_{y_{i}}\right\rangle \\ &=-\sum\limits_{i=1}^{n} \sum\limits_{q=1}^{k-1} \alpha_{i, y_{i}}\left(\boldsymbol{X}_{i}^{\mathrm{T}} \boldsymbol{\beta}_{q}\left(u_{0}\right)\right) W_{y_{i}, q} \\ L_{2} &=\sum\limits_{i=1}^{n} \sum\limits_{j \neq y_{i}} \alpha_{i, j}\left\langle f\left(u_{0}, X_{i}\right), W_{j}\right\rangle \\ &=\sum\limits_{i=1}^{n} \sum\limits_{q=1}^{k-1} \sum\limits_{j \neq y_{i}} \alpha_{i, j}\left(\boldsymbol{X}_{i}^{\mathrm{T}} \boldsymbol{\beta}_{q}\left(u_{0}\right)\right) W_{j, q} .\end{aligned}$ |

| $\begin{array}{*{20}{l}}{\frac{{\partial L}}{{\partial {\xi _{i, j}}}} = {A_{i, j}} - {\tau _{i, j}} - {\alpha _{i, j}} = 0}\\{(i = 1, \cdots , n;j = 1, \cdots , k), }\end{array}$ | (10) |

| $\begin{array}{*{20}{c}}{\frac{{\partial L}}{{\partial {\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right)}} = \lambda {\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right) - \sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} {W_{{y_i}, q}}{X_i} + }\\{\sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } {W_{j, q}}{X_i} = 0.}\end{array}$ | (11) |

| ${\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right) = \frac{1}{{n\lambda }}\left[ {\sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} {W_{{y_i}, q}}{X_i} - \sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } {W_{j, q}}{X_i}} \right]$ | (12) |

| $X_{i}^{\mathrm{T}}=-\frac{\lambda \boldsymbol{\beta}_{q}^{\mathrm{T}}}{\sum_{i=1}^{n}\left(\sum_{j \neq y_{i}} \alpha_{i, j} W_{j, q}-\alpha_{i, y_{i}} W_{y_{i}, q}\right)}.$ |

| $\begin{array}{*{20}{c}}{{L_1} + {L_2} = \sum\limits_{q = 1}^{k - 1} {\sum\limits_{i = 1}^n {\left( {\mathit{\boldsymbol{X}}_i^{\rm{T}}{\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right)} \right)} } \left[ {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} {W_{j, q}} - } \right.}\\{{\alpha _{i, {y_i}}}{W_{{y_{i, q}}}}] = - \lambda \sum\limits_{q = 1}^{k - 1} {\mathit{\boldsymbol{\beta }}_q^{\rm{T}}} \left( {{u_0}} \right){\mathit{\boldsymbol{\beta }}_q}\left( {{u_0}} \right).}\end{array}$ | (13) |

| $L=-\frac{n \lambda}{2} \sum\limits_{q=1}^{k-1} \boldsymbol{\beta}_{q}^{\mathrm{T}}\left(u_{0}\right) \boldsymbol{\beta}_{q}\left(u_{0}\right)+\sum\limits_{i=1}^{n} \sum\limits_{j \neq y_{i}} \alpha_{i, j}.$ | (14) |

| $\begin{array}{*{20}{c}}{\mathop {\min }\limits_{{\alpha _{i, j}}} \frac{1}{{2n\lambda }}\sum\limits_{q = 1}^{k - 1} {{{\left[ {\sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} {W_{{y_i}, q}}{X_i} - \sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } {W_{i, q}}{X_i}} \right]}^{\rm{T}}}} }\\{\left[ {\sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} {W_{{y_i}, q}}{X_i} - \sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } {W_{i, q}}{X_i}} \right] - }\\{\sum\limits_{i = 1}^n {{\alpha _{i, {y_i}}}} (k - 1) - \sum\limits_{i = 1}^n {\sum\limits_{j \ne {y_i}} {{\alpha _{i, j}}} } }\\{{\rm{ s}}{\rm{.t}}{\rm{.}}\;\;\;0 \le {\alpha _{i, j}} \le {A_{i, j}}.}\end{array}$ | (15) |

Assume that

| $\boldsymbol{w}^{i, j}=\left[w_{1}^{i+1}, \cdots, w_{j-1}^{i+1}, w_{j}^{i}, \cdots, w_{n}^{i}\right]^{\mathrm{T}}.$ |

| $\begin{array}{*{20}{c}}{\mathop {\min }\limits_z f\left( {w_1^{j + 1}, \cdots , w_{j - 1}^{i + 1}, w_j^i + z, w_{j + 1}^i, \cdots , w_n^i} \right) \equiv }\\{\mathop {\min }\limits_z f\left( {{w^{i, j}} + z{\mathit{\boldsymbol{e}}_j}} \right), }\end{array}$ |

Now we present the computational procedure of TLAMSVM using coordinate descent method.

Table

|

Step 1. Initialize the solution vector

Step 2. Assume the estimated value in mth iteration is

| $\begin{aligned} \hat{\boldsymbol{B}}_{j}^{(m)}=&\left(\hat{\alpha}_{j}^{(m)}\left(u_{0}\right), \hat{\boldsymbol{\beta}}_{j}^{(m), (1)}\left(u_{0}\right)\right., \\ & \hat{\boldsymbol{\beta}}_{j}^{(m), (2)}\left(u_{0}\right), \cdots, \hat{\boldsymbol{\beta}}_{j}^{(m), (p)}\left(u_{0}\right) )^{\mathrm{T}}.\end{aligned}$ |

Therefore, in the m+1th step, =1, …, n, j=1, …, k-1, l=1, …, p, we set

| ${G_{i, j}}(z) = \sum\limits_{q < l} {{X_{i, q}}} \hat B_j^{(m + 1), (q)} + z{X_{i, l}} + \sum\limits_{q > l} {{X_{i, q}}} \hat B_j^{(m), (q)}, $ |

| $\begin{array}{c}{F_{i, j, -l}(z)=\left(\boldsymbol{X}_{i}^{\mathrm{T}} \hat{\boldsymbol{B}}_{1}^{(m+1)}, \cdots, \boldsymbol{X}_{i}^{\mathrm{T}} \hat{\boldsymbol{B}}_{j-1}^{(m+1)}\right.}, \\ {G_{i, j}(z), \boldsymbol{X}_{i}^{\mathrm{T}} \hat{\boldsymbol{B}}_{j+1}^{(m)}, \cdots, \boldsymbol{X}_{i}^{\mathrm{T}} \hat{\boldsymbol{B}}_{k-1}^{(m)} )^{\mathrm{T}}}.\end{array}$ |

| $\hat{B}_{j}^{(m+1), (l)}=\operatorname{argmin}_{z}\left[\lambda z^{2}+\frac{1}{n} \Sigma_{i=1}^{n} l\left\{\left\langle F_{i, j, -l}(z), W_{y_{i}}\right\rangle\right\}\right].$ | (16) |

After having

| $\begin{aligned} f_{q}(u, \boldsymbol{X})=& \boldsymbol{X}^{\mathrm{T}} \hat{\boldsymbol{\beta}}_{q}(u)=\left(1, X^{(1)}, X^{(2)}, \cdots, X^{(p)}\right)\cdot \\ &\left(\hat{\alpha}_{q}(u), \hat{\boldsymbol{\beta}}_{q}^{(1)}(u), \cdots, \hat{\boldsymbol{\beta}}_{q}^{(p)}(u)\right)^{\mathrm{T}}. \end{aligned}$ |

| $f(u, \boldsymbol{X})=\left(f_{1}(u, \boldsymbol{X}), f_{2}(u, \boldsymbol{X}), \cdots, f_{k-1}(u, \boldsymbol{X})\right).$ |

| $\hat y = {{\mathop{\rm argmin}\nolimits} _{j \in \{ 1, \cdots , k\} }}\angle \left( {f\left( {{u^\prime }, {X^\prime }} \right), {W_j}} \right).$ |

It is worth noting that when dealing with non-linear problems, TLAMSVM model needs both Mercer kernel function K(·) and local smoothing kernel function khn(·). The difference is obvious, the former is used to construct non-linear decision boundaries, while the latter is used to aggregate information near u0. In numerical experiments, we use linear Mercer kernel, gaussian Mercer kernel and polynomial Mercer kernel as Mercer kernel function. And uniform kernel, gaussian kernel and Epanechikov(short as ep) kernel function as local smoothing kernel function.

3.1 Simulation resultsWe generate two simulated data sets: S1 and S2. Data in S1 has 1 000 samples with 3-class label and 3 features. Data in S2 has 1 500 samples with 5-class label and 7 features. We carry out 10 simulation runs for each setting and the final results are averaged values. We generate targeted variable Ui from U(0, 1) for both S1 and S2. And the label of S1 is defined as (17). As for S2, we use the same method to generate label, due to limitation of space, the formula wouldn't be listed in this article.

| $Y_{i}=\left\{\begin{array}{ll}{1} & {\text { if } U_{i}^{2}+U_{i}-1+\epsilon_{i} \leqslant U_{\frac{1}{3}}} \\ {2} & {\text { if } U_{\frac{1}{3}} \leqslant U_{i}^{2}+U_{i}-1+\epsilon_{i} \leqslant U_{\frac{2}{3}}} \\ {3} & {\text { if } U_{i}^{2}+U_{i}-1+\epsilon_{i}>U_{\frac{2}{3}}}\end{array}\right.$ | (17) |

| ${\mathit{\boldsymbol{X}}_i}|{Y_i}, {U_i}\sim MVN\left\{ {\beta \left( {{U_i}} \right){Y_i}, {\sigma ^2}I} \right\}$ |

As for S2, we generate features Xi=(Xi1, Xi2, Xi3, Xi4, Xi5, Xi6)T from and σ=2

| $\begin{aligned}& {\mathit{\boldsymbol{X}}_i}\sim MVN\left( {{\mu ^{(k)}}\eta \left( {{U_i}} \right){I_{|{X_i} \in {\rm{ classk }}|}}, {\sigma ^2}I} \right) \\ \boldsymbol{\eta}\left(U_{i}\right)=&\left(2 U_{i}, U_{i}^{2}+2 U_{i}-1, \cos \left(2 \pi U_{i}\right)\right., \\ & 4 \mathrm{e}^{\left(U_{i}-0.3\right)^{2}}, \ln \left(\frac{1}{U_{i}^{2}}\right), \sin \left(3 \pi U_{i}\right) )^{\mathrm{T}}. \end{aligned}$ | (18) |

| $\begin{array}{c}{\mu^{(1)}=(5, 5, 0, 0, 0, 0), \mu^{(2)}=(0, 5, 5, 0, 0, 0)} \\ {\mu^{(3)}=(0, 0, 5, 5, 0, 0), \mu^{(4)}=(0, 0, 0, 5, 5, 0)} \\ {\mu^{(5)}=(0, 0, 0, 0, 5, 5)}\end{array}$ |

Table 1 and Table 2 record the effects of model RAMSVM and TLAMSVM on simulated data S1 and S2.

Table 1

| Table 1 Comparison of f1 score between RAMSVM and TLAMSVM on data set S1 | |||||||||||||||||||||||||||||||||||||||||||||||||

Table 2

| Table 2 Comparison of f1 score between RAMSVM and TLAMSVM on data set S2 | |||||||||||||||||||||||||||||||||||||||||||||||||

Since RAMSVM model does not need to use local smoothing kernel function to build many MSVMs according to targeted variable, it has advantage of computation time. But as for predictive accuracy, TLAMSVM model still has a better performance no matter we use which Mercer kernel or local smoothing kernel function.

Table 3 and Table 4 record f1 scores and training time of KMSVM and TLAMSVM model on simulated data S1 and S2.

Table 3

| Table 3 Comparison of f1 score between KMSVM and TLAMSVM on data sets S1 and S2 |

Table 4

| Table 4 Comparison of f1 score between KMSVM and TLAMSVM on data set S2 |

TLAMSVM model provides a better prediction than KMSVM model with same Mercer or local smoothing kernel function and similar training time. The values in Table 5 are the average results of prediction of model RAMSVM and TLAMSVM on S1.

Table 5

| Table 5 Comparison between RAMSVM and TLAMSVM on data set S1 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Here we compare three cases, which have different feature space respectively, Xi1 only, Xi2 only and both of them. We find that the f1 score of TLAMSVM model is much larger than that of RAMSVM model when using single feature variable. So TLAMSVM model does not rely on the number of feature variables in model so much as RAMSVM model does. Likewise, TLAMSVM model has better results with any kernel function in three cases.

3.2 Real data analysisIn this subsection, we demonstrate the classification effects of TLAMSVM model via using two real data sets, Cervical Cancer data and Protein Localization Sites data, named as D1 and D2. Both of them are from University of California Irvine machine learning repository website. The Cervical Cancer data is derived from Universitario de Caracas Hospital. It is collected from 858 patients and it includes demographic information, patients' habits and their historic medical records. The Protein Localization Sites data is derived from a research about an expert system, which uses the information of the amino acid sequence and source origin to predict localization sites of protein. It has 1 484 samples and 10 categories.

3.2.1 Application to Cervical Cancer data setCervical cancer remains one of the leading causes of death among patients with malignant gynecologic disease. It could be caused by a number of factors, for instance, viral infection, the number of sexual behavior, the number of pregnancies, smoking habits etc. From the viewpoint of Computed Aided Diagnosis System, there are several diagnosis methods in the detection of pre-cancerous cervical lesions. Here are the four most frequently-used methods in examination, colposcopy using acetic acid-Hinselmann, colposcopy using Lugol iodine-Schiller, cytology and biopsy[14]. The data set records the results of these four tests(Hinselmann, Schiller, cytology and biopsy). From these four tests results, we generate artificially a response variable y∈{1, 2, 3} that reflects the confidence of the diagnosis. When all of the four test results are normal, y=1, when the results of any one or two test are normal, y=2, and y=3 for rest situations. Then we get a 3-category data set.

After some common data preprocessing procedures, we get a data set without missing data nor outliers. And we reduce the number of variables in model from 31 to 12 by calculating the conditional information entropy and information gain of each variable. And we regard feature "age" as targeted variable in this data set. Due to the special property of data, we consider use a combination of undersampling and oversampling techniques (ENN+SMOTE) as balancing tool to process training data. The principle of ENN is to remove the samples whose class is different from class of the majority of its k-nearest neighbor. SMOTE[15] is a relatively basic oversampling method that reduces the differences of number between different categories. For each sample of the minority class, connect this sample between the nearest k samples of it with same category, then there will be k lines, generate one sample randomly from each line, then get k new samples of the minority class.

Table 6 is the numerical results of model TLAMSVM and RAMSVM with different Mercer kernel functions and local smoothing kernel functions on cervical cancer data set. It can be seen that, when using the same local smoothing kernel function, the polynomial Mercer kernel has the best classification accuracy. With the same Mercer kernel function, the Epanechikov and gaussian local kernel function has the relatively better prediction performance. Comparing the average accuracy of TLAMSVM model, the average prediction effect of TLAMSVM model is slightly better than that of RAMSVM model.

Table 6

| Table 6 Summary of accuracy of TLAMSVM and RAMSVM on Cervical Cancer data set | ||||||||||||||||||||||||||||||||||||||||||||||

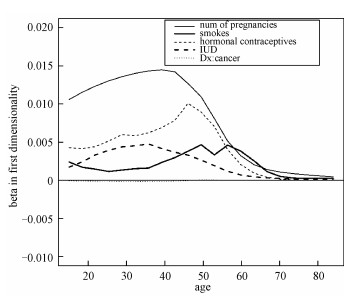

We can get the corresponding estimated coefficient β(u) for any given value of targeted variable u. Figure 1 is the line chart of the coefficient in the first dimension, it shows the variation trend of each coefficient corresponding to 5 feature variables as "age" changes.

Fig. 1

| Download: JPG larger image |

Fig. 1 β1(u) vs. the targeted variable (age) on D1 | |

From Fig. 1 we can see, the influence of feature "Smokes" is small when age is between 20 and 40, but it increases a lot when age becomes larger. It means when facing the young patients, the information about his or her smoking habit is not as important as other variables. However, when judging the middle-aged patients, their smoke habit could offer more information for classification.

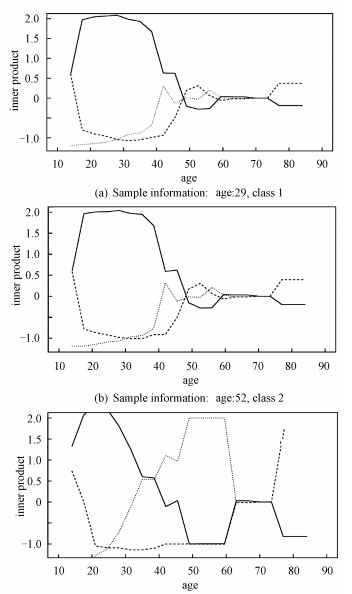

Figure 2 describe the inner product of classification function vector of sample and each simplex (〈f(x), Wj〉, (j=1, 2, 3)) when targeted variable U takes different values.

Fig. 2

| Download: JPG larger image |

solid 〈f(x), W1〉, dashed: 〈f(x), W2〉, dotted:〈f(x), W3〉. Fig. 2 Predictions for the three given samples on D1 | |

From Section 3 we know that the sample will be classified to the category which has the maximal inner product value. So at any fixed age, the class which the top line corresponds to is the prediction result. Fig. 2 reflects that different value for the targeted variable will affect final classification result of 3 given samples. These three subgraphs are the prediction details of three samples, which belong to the class-1, class-2 and class-3 respectively. The age of these these samples are 29, 52, 51 in sequence.

Firstly, we can find that TLAMSVM model gives the correct prediction according to the prediction rules. Secondly, for identical feature variables, different values of the targeted variable will affect the result of determination for that sample. That is to say, with fixed other physiological characteristics, if we assume that the patient is at different ages, it would come out different diagnosis results. Take the third sample as an example, the patient is 51 years old and the true label is 3, which means the patient has high-risk of suffering from cervical cancer. However, we would give an entirely different judgement if we regard the same physiological characteristics values as information from a patient with other age. Patient whose age is from 20 to 30, would be diagnosed as the class-1(no cervical cancer), and patient whose age is over 75, would be diagnosed as class-2(no confirmed). Thus it can be observed that the TLAMSVM model can accurately grasp the essential information of the sample by studying the relationship between the targeted variable and other feature variables to provide better prediction.

3.2.2 Application to Protein Localization Sites data setProtein Localization Sites data set has 8 features, which are scores of some methods for measuring information about protein or amino acid. It has 10 classes to represent the different localization sites of protein, such as CYT(cytosolic or cytoskeletal), NUC(nuclear), MIT(mitochondrial) etc. We use model RAMSVM and TLAMSVM to train data and get classifiers.

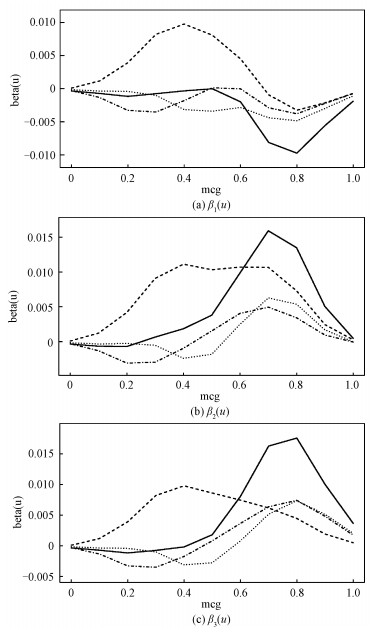

Figure 3 is based on D2 (Protein Localization Sites data).

Fig. 3

| Download: JPG larger image |

solid: feature 'gvh', dashed: feature 'alm', dotted: feature 'mit', dashed-dotted: feature 'nuc'. Fig. 3 Estimated values for the coefficients of the first three dimensions on D2 | |

And we set "mcg"(McGeoch's method for signal sequence recognition) as targeted variable. Because of limitations of space, Fig. 3 only demonstrates the coefficient β(u) in the first, second and third dimension. And these subgraphs are enough to show the variability of coefficients in model TLAMSVM.

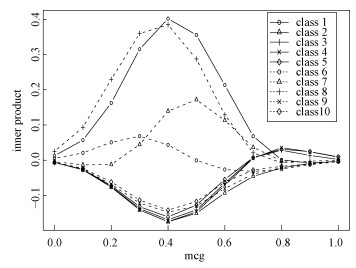

Figure 4 is the inner product graph for a certain sample, whose feature "mcg" equals to 0.45 and real label is class-1. From Fig. 4, we know that the TLAMSVM model gives a correct prediction (class-1) for this sample, and the classification results would be different if the "mcg" value of this sample was different.

Fig. 4

| Download: JPG larger image |

Fig. 4 Predictions for a given sample under different targeted variables (mcg) on D2 | |

Table 7 records the effect of TLAMSVM and RAMSVM model on Protein Localization Sites data set. From Table 7 we can get the same conclusion as Cervical Cancer data. The classification accuracy of TLAMSVM model is better than that of RAMSVM model, consistent with the simulation results. And we can get the same result of classification prediction from the model and its prediction rule.

Table 7

| Table 7 Summary of accuracy of TLAMSVM and RAMSVM on Protein Localization Sites dataset | |||||||||||||||||||||||||||||||||||||||||||||

4 DiscussionIn this study, TLAMSVM model is proposed to solve multi-category classification problems and make a better prediction for different samples. In numerical experiment section, we firstly predict the risk of disease and show its dependency on the age. Building age-specific predictive model helps to study the appropriate time of intervention and find potential features which provides individualized treatment for a certain disease. The fitting coefficient β(u) describes the age sensitivity of each feature variable to disease risk. Then we obtain good results on protein localization sites data and make a more "personalized" prediction for samples whose "mcg" values are different. Finally, the simulation results and real data analysis show that the different selections for Mercer kernel and smoothing kernel functions may lead to minor differences in prediction accuracy.

We consider the feature variables with the targeted variable dependency, but it is easy to ignore the features which have stable effect. Thus, we will add some features with invariant effects to the initial classification function in future work, like α(U)+XTβ(U)+ZTγ.

References

| [1] | Cortes C, Vapnik V. Support vector networks[J]. Machine Learning, 1995, 20(3): 273-297. |

| [2] | Yau G X, Zhang C. Multi-category angle-based classifier refit[EB/OL]. (2016-07-19)[2017-08-12]. https://arxiv.org/abs/1607.05709. |

| [3] | Moguerza J M, Mu?oz A. Support vector machines with applications[J]. Statistical Science, 2006, 21(3): 322-336. DOI:10.1214/088342306000000493 |

| [4] | Allwein E L, Schapire R E, Singer Y. Reducing multiclass to binary:a unifying approach for margin classifiers[J]. Proc International Conference on Machine Learning, San Francisco Ca:Morgan Kaufmann, 2000, 1(2): 9-16. |

| [5] | Hastie T, Tibshirani R. Classification by pairwise coupling[C]//Conference on Advances in Neural Information Processing Systems. MIT Press, 1998: 507-513. |

| [6] | Crammer K, Singer Y. On the algorithmic implementation of multiclass kernel-based vector machines[J]. J Machine Learning Res, 2001, 2(2): 265-292. |

| [7] | Lee Y, Lin Y, Wahba G. Multicategory support vector machines:theory and application to the classification of microarray data and satellite radiance data[J]. Journal of the American Statistical Association, 2004, 99(465): 67-81. DOI:10.1198/016214504000000098 |

| [8] | Zhang C, Liu Y, Wu Z. On the effect and remedies of shrinkage on classification probability estimation[J]. American Statistician, 2013, 67(3): 134-142. DOI:10.1080/00031305.2013.817356 |

| [9] | Zhang C, Liu Y. Multicategory angle-based large-margin classification[J]. Biometrika, 2014, 3(3): 625-640. |

| [10] | Zhang C, Liu Y, Wang J, et al. Reinforced angle-based multicategory support vector machines[J]. Journal of Computational and Graphical Statistics, 2016, 25(3): 806-825. DOI:10.1080/10618600.2015.1043010 |

| [11] | Wei Z, Wang K, Qu H Q, et al. From disease association to risk assessment:an optimistic view from genome-wide association studies on type 1 diabetes[J]. Plos Genetics, 2009, 5(10): e1000678. DOI:10.1371/journal.pgen.1000678 |

| [12] | Torr P H S. Locally linear support vector machines[C]//International Conference on International Conference on Machine Learning. Omnipress, 2011: 985-992. |

| [13] | Chen T, Wang Y, Chen H, et al. Targeted local support vector machine for age-dependent classification[J]. Journal of the American Statistical Association, 2014, 109(507): 1174-1187. DOI:10.1080/01621459.2014.881743 |

| [14] | Fernandes K, Cardoso J S, Fernandes J. Transfer Learning with Partial Observability Applied to Cervical Cancer Screening[M] Pattern Recognition and Image Analysis. 2017: 243-250. |

| [15] | Chawla N V, Bowyer K W, Hall L O, et al. SMOTE:synthetic minority over-sampling technique[J]. Journal of Artificial Intelligence Research, 2002, 16(1): 321-357. |